Planet Python

Last update: February 06, 2026 04:44 PM UTC

February 06, 2026

Real Python

The Real Python Podcast – Episode #283: Improving Your GitHub Developer Experience

What are ways to improve how you're using GitHub? How can you collaborate more effectively and improve your technical writing? This week on the show, Adam Johnson is back to talk about his new book, "Boost Your GitHub DX: Tame the Octocat and Elevate Your Productivity".


February 06, 2026 12:00 PM UTC

February 05, 2026

Eli Bendersky

Rewriting pycparser with the help of an LLM

pycparser is my most widely used open source project (with ~20M daily downloads from PyPI [1]). It's a pure-Python parser for the C programming language, producing ASTs inspired by Python's own. Until very recently, it's been using PLY: Python Lex-Yacc for the core parsing.

In this post, I'll describe how I collaborated with an LLM coding agent (Codex) to help me rewrite pycparser to use a hand-written recursive-descent parser and remove the dependency on PLY. This has been an interesting experience and the post contains lots of information and is therefore quite long; if you're just interested in the final result, check out the latest code of pycparser - the main branch already has the new implementation.

The issues with the existing parser implementation

While pycparser has been working well overall, there were a number of nagging issues that persisted over years.

Parsing strategy: YACC vs. hand-written recursive descent

I began working on pycparser in 2008, and back then using a YACC-based approach for parsing a whole language like C seemed like a no-brainer to me. Isn't this what everyone does when writing a serious parser? Besides, the K&R2 book famously carries the entire grammar of the C99 language in an appendix - so it seemed like a simple matter of translating that to PLY-yacc syntax.

And indeed, it wasn't too hard, though there definitely were some complications in building the ASTs for declarations (C's gnarliest part).

Shortly after completing pycparser, I got more and more interested in compilation and started learning about the different kinds of parsers more seriously. Over time, I grew convinced that recursive descent is the way to go - producing parsers that are easier to understand and maintain (and are often faster!).
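As a rough illustration of why recursive descent tends to be easy to follow, here is a minimal sketch of a hand-written parser for arithmetic expressions. It is not pycparser's code: the toy grammar, the `Parser` class and the tuple-based AST are all assumptions made up for this example. The point is only that each grammar rule maps to one method.

```python
import re

# One number or one non-space character per token.
TOKEN_RE = re.compile(r"\s*(?:(\d+)|(.))")


def tokenize(text):
    """Split text into ('num', int) and ('op', char) tokens."""
    tokens = []
    for number, op in TOKEN_RE.findall(text):
        if number:
            tokens.append(("num", int(number)))
        elif op.strip():
            tokens.append(("op", op))
    return tokens


class Parser:
    # Hypothetical grammar, for illustration only:
    #   expr   := term (('+' | '-') term)*
    #   term   := factor (('*' | '/') factor)*
    #   factor := NUMBER | '(' expr ')'
    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        if self.pos < len(self.tokens):
            return self.tokens[self.pos]
        return ("eof", None)

    def advance(self):
        tok = self.peek()
        self.pos += 1
        return tok

    def parse(self):
        node = self.expr()
        if self.peek()[0] != "eof":
            raise SyntaxError(f"unexpected token {self.peek()[1]!r}")
        return node

    def expr(self):
        node = self.term()
        while self.peek() in (("op", "+"), ("op", "-")):
            op = self.advance()[1]
            node = (op, node, self.term())  # left-associative
        return node

    def term(self):
        node = self.factor()
        while self.peek() in (("op", "*"), ("op", "/")):
            op = self.advance()[1]
            node = (op, node, self.factor())
        return node

    def factor(self):
        kind, value = self.advance()
        if kind == "num":
            return value
        if (kind, value) == ("op", "("):
            node = self.expr()
            if self.advance() != ("op", ")"):
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {value!r}")
```

With this sketch, `Parser(tokenize("1 + 2 * 3")).parse()` returns `('+', 1, ('*', 2, 3))`: precedence falls out of which method calls which, with no table generation step.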

It all ties in to the benefits of dependencies in software projects as a function of effort. Using parser generators is a heavy conceptual dependency: it's really nice when you have to churn out many parsers for small languages. But when you have to maintain a single, very complex parser, as part of a large project - the benefits quickly dissipate and you're left with a substantial dependency that you constantly grapple with.

The other issue with dependencies

And then there are the usual problems with dependencies; dependencies get abandoned, and they may also develop security issues. Sometimes, both of these become true.

Many years ago, pycparser forked and started vendoring its own version of PLY. This was part of transitioning pycparser to a dual Python 2/3 code base when PLY was slower to adapt. I believe this was the right decision, since PLY "just worked" and I didn't have to deal with active (and very tedious in the Python ecosystem, where packaging tools are replaced faster than dirty socks) dependency management.

A couple of weeks ago this issue was opened for pycparser. It turns out that some old PLY code triggers security checks used by some Linux distributions; while this code was fixed in a later commit of PLY, PLY itself was apparently abandoned and archived in late 2025. And guess what? That happened in the middle of a large rewrite of the package, so re-vendoring the pre-archiving commit seemed like a risky proposition.

On the issue it was suggested that "hopefully the dependent packages move on to a non-abandoned parser or implement their own"; I originally laughed this idea off, but then it got me thinking... which is what this post is all about.

Growing complexity of parsing a messy language

The original K&R2 grammar for C99 had - famously - a single shift-reduce conflict having to do with dangling elses belonging to the most recent if statement. And indeed, other than the famous lexer hack used to deal with C's type name / ID ambiguity, pycparser only had this single shift-reduce conflict.
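For readers unfamiliar with the lexer hack: the lexer cannot tell a type name from an ordinary identifier without knowing which names were introduced by typedef, so the parser feeds that information back to it. A rough sketch of the idea follows; the function and token names are hypothetical (not pycparser's actual API), and scoping is ignored entirely.

```python
def classify_identifier(name, typedef_names):
    """Return 'TYPEID' if name was declared via typedef, else 'ID'."""
    return "TYPEID" if name in typedef_names else "ID"


# The parser records typedef names as it processes declarations...
typedef_names = set()
typedef_names.add("size_t")  # e.g. after: typedef unsigned long size_t;

# ...and the lexer consults that table for every identifier it sees.
# In `T * x;` the answer decides between a pointer declaration
# (T is a type) and a multiplication expression (T is a variable).
kinds = [classify_identifier(n, typedef_names) for n in ("size_t", "x")]
```

Here `kinds` ends up as `['TYPEID', 'ID']`.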

But things got more complicated. Over the years, features were added that weren't strictly in the standard but were supported by all the industrial compilers. The more advanced C11 and C23 standards weren't beholden to the promises of conflict-free YACC parsing (since almost no industrial-strength compilers use YACC at this point), so all caution went out of the window.

The latest (PLY-based) release of pycparser has many reduce-reduce conflicts [2]; these are a severe maintenance hazard because it means the parsing rules essentially have to be tie-broken by order of appearance in the code. This is very brittle; pycparser has only managed to maintain its stability and quality through its comprehensive test suite. Over time, it became harder and harder to extend, because YACC parsing rules have all kinds of spooky-action-at-a-distance effects. The straw that broke the camel's back was this PR which again proposed to increase the number of reduce-reduce conflicts [3].

This - again - prompted me to think "what if I just dump YACC and switch to a hand-written recursive descent parser", and here we are.

The mental roadblock

None of the challenges described above are new; I've been pondering them for many years now, and yet biting the bullet and rewriting the parser didn't feel like something I'd like to get into. By my private estimates it'd take at least a week of deep heads-down work to port the gritty 2000 lines of YACC grammar rules to a recursive descent parser [4]. Moreover, it wouldn't be a particularly fun project either - I didn't feel like I'd learn much new and my interests have shifted away from this project. In short, the potential well was just too deep.

Why would this even work? Tests

I've definitely noticed the improvement in capabilities of LLM coding agents in the past few months, and many reputable people online rave about using them for increasingly larger projects. That said, would an LLM agent really be able to accomplish such a complex project on its own? This isn't just a toy, it's thousands of lines of dense parsing code.

What gave me hope is the concept of conformance suites mentioned by Simon Willison. Agents seem to do well when there's a very clear and rigid goal function - such as a large, high-coverage conformance test suite.

And pycparser has a very extensive one: over 2500 lines of test code parsing various C snippets to ASTs with expected results, grown over a decade and a half of real issues and bugs reported by users.

I figured the LLM can either succeed or fail and throw its hands up in despair, but it's quite unlikely to produce a wrong port that would still pass all the tests. So I set it to run.
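The shape of such a rigid goal function can be sketched as a table of inputs and expected results that any candidate implementation must reproduce exactly. The harness below is a hypothetical illustration, not pycparser's test suite; it uses `json.loads` from the standard library as a stand-in for the parser under test.

```python
import json


def run_conformance(parse, cases):
    """Run parse on each input; return (source, expected, actual) failures."""
    failures = []
    for source, expected in cases:
        try:
            actual = parse(source)
        except Exception as exc:  # a crash counts as a failure too
            actual = exc
        if actual != expected:
            failures.append((source, expected, actual))
    return failures


# Each case pairs an input with the exact result it must produce.
CASES = [
    ("[1, 2, 3]", [1, 2, 3]),
    ('{"a": null}', {"a": None}),
    ('"text"', "text"),
]

failures = run_conformance(json.loads, CASES)
```

An empty `failures` list is the only acceptable outcome, which is what makes a suite like this a clear target for an agent: either every case matches or the port is not done.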

The initial port

I fired up Codex in pycparser's repository, and wrote this prompt just to make sure it understands me and can run the tests:

This repository implements a Python parser producing an AST. It has a very extensive test suite; can you first verify you successfully run all the tests? (with python3 -m unittest discover, from the root dir)

Codex figured it out (I gave it the exact command, after all!); my next prompt was the real thing [5]:

pycparser uses the PLY library for its lexer and parser, and its own AST package for the ASTs created by the parser. I want to explore replacing the Parser component (leaving the Lexer alone for now) with a hand written recursive descent parser that will pass all the same tests. Can you try that?

Here Codex went to work and churned for over an hour. Having never observed an agent work for nearly this long, I kind of assumed it went off the rails and will fail sooner or later. So I was rather surprised and skeptical when it eventually came back with:

Replaced the PLY parse path with a handwritten recursive-descent parser while keeping the existing lexer and AST helpers intact; CParser now points to the new RD implementation, with the old PLY version preserved as PLYCParser in pycparser/c_parser.py. The RD parser includes a token stream, declarator/type parsing, expression precedence handling, pragmas, and statement parsing to match the previous AST output and coordinate behavior.

It took me a while to poke around the code and run it until I was convinced - it had actually done it! It wrote a new recursive descent parser with only ancillary dependencies on PLY, and that parser passed the test suite. After a few more prompts, we've removed the ancillary dependencies and made the structure clearer. I hadn't looked too deeply into code quality at this point, but at least on the functional level - it succeeded. This was very impressive!

A quick note on reviews and branches

A change like the one described above is impossible to code-review as one PR in any meaningful way; so I used a different strategy. Before embarking on this path, I created a new branch and once Codex finished the initial rewrite, I committed this change, knowing that I will review it in detail, piece-by-piece later on.

Even though coding agents have their own notion of history and can "revert" certain changes, I felt much safer relying on Git. In the worst case if all of this goes south, I can nuke the branch and it's as if nothing ever happened. I was determined to only merge this branch onto main once I was fully satisfied with the code. In what follows, I had to git reset several times when I didn't like the direction in which Codex was going. In hindsight, doing this work in a branch was absolutely the right choice.

The long tail of goofs

Once I've sufficiently convinced myself that the new parser is actually working, I used Codex to similarly rewrite the lexer and get rid of the PLY dependency entirely, deleting it from the repository. Then, I started looking more deeply into code quality - reading the code created by Codex and trying to wrap my head around it.

And - oh my - this was quite the journey. Much has been written about the code produced by agents, and much of it seems to be true. Maybe it's a setting I'm missing (I'm not using my own custom AGENTS.md yet, for instance), but Codex seems to be that eager programmer that wants to get from A to B whatever the cost. Readability, minimalism and code clarity are very much secondary goals.

Using raise...except for control flow? Yep. Abusing Python's weak typing (like having None, False and other values all mean different things for a given variable)? For sure. Spreading the logic of a complex function all over the place instead of putting all the key parts in a single switch statement? You bet.

Moreover, the agent is hilariously lazy. More than once I had to convince it to do something it initially said is impossible, and even insisted again in follow-up messages. The anthropomorphization here is mildly concerning, to be honest. I could never imagine I would be writing something like the following to a computer, and yet - here we are: "Remember how we moved X to Y before? You can do it again for Z, definitely. Just try".

My process was to see how I can instruct Codex to fix things, and intervene myself (by rewriting code) as little as possible. I've mostly succeeded in this, and did maybe 20% of the work myself.

My branch grew dozens of commits, falling into roughly these categories:

1. The code in X is too complex; why can't we do Y instead?

2. The use of X is needlessly convoluted; change Y to Z, and T to V in all instances.

3. The code in X is unclear; please add a detailed comment - with examples - to explain what it does.

Interestingly, after doing (3), the agent was often more effective in giving the code a "fresh look" and succeeding in either (1) or (2).

The end result

Eventually, after many hours spent in this process, I was reasonably pleased with the code. It's far from perfect, of course, but taking the essential complexities into account, it's something I could see myself maintaining (with or without the help of an agent). I'm sure I'll find more ways to improve it in the future, but I have a reasonable degree of confidence that this will be doable.

It passes all the tests, so I've been able to release a new version (3.00) without major issues so far. The only issue I've discovered is that some of CFFI's tests are overly precise about the phrasing of errors reported by pycparser; this was an easy fix.

The new parser is also faster, by about 30% based on my benchmarks! This is typical of recursive descent when compared with YACC-generated parsers, in my experience. After reviewing the initial rewrite of the lexer, I've spent a while instructing Codex on how to make it faster, and it worked reasonably well.

Followup - static typing

While working on this, it became quite obvious that static typing would make the process easier. LLM coding agents really benefit from closed loops with strict guardrails (e.g. a test suite to pass), and type-annotations act as such. For example, had pycparser already been type annotated, Codex would probably not have overloaded values to multiple types (like None vs. False vs. others).
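A hypothetical illustration of the overloading problem and how annotations constrain it: instead of letting None, False and a real value mean three different things, each outcome gets a name that a type checker can see. None of these names come from pycparser; they are invented for this sketch.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Lookup(Enum):
    FOUND = auto()
    NOT_FOUND = auto()
    NOT_APPLICABLE = auto()


@dataclass(frozen=True)
class LookupResult:
    status: Lookup
    value: Optional[str] = None


def find_type(name: str, table: Optional[dict[str, str]]) -> LookupResult:
    """Look up name, making every outcome an explicit, typed state."""
    if table is None:
        # Previously this might have been signalled by returning False.
        return LookupResult(Lookup.NOT_APPLICABLE)
    if name in table:
        return LookupResult(Lookup.FOUND, table[name])
    # Previously this might have been signalled by returning None.
    return LookupResult(Lookup.NOT_FOUND)
```

With the return type fixed to `LookupResult`, a checker would flag any code path that tries to return a bare `False` or `None`, which is the kind of guardrail described above.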

In a followup, I asked Codex to type-annotate pycparser (running checks using ty), and this was also a back-and-forth because the process exposed some issues that needed to be refactored. Time will tell, but hopefully it will make further changes in the project simpler for the agent.

Based on this experience, I'd bet that coding agents will be somewhat more effective in strongly typed languages like Go, TypeScript and especially Rust.

Conclusions

Overall, this project has been a really good experience, and I'm impressed with what modern LLM coding agents can do! While there's no reason to expect that progress in this domain will stop, even if it does - these are already very useful tools that can significantly improve programmer productivity.

Could I have done this myself, without an agent's help? Sure. But it would have taken me much longer, assuming that I could even muster the will and concentration to engage in this project. I estimate it would take me at least a week of full-time work (so 30-40 hours) spread over who knows how long to accomplish. With Codex, I put in an order of magnitude less work into this (around 4-5 hours, I'd estimate) and I'm happy with the result.

It was also fun. At least in one sense, my professional life can be described as the pursuit of focus, deep work and flow. It's not easy for me to get into this state, but when I do I'm highly productive and find it very enjoyable. Agents really help me here. When I know I need to write some code and it's hard to get started, asking an agent to write a prototype is a great catalyst for my motivation. Hence the meme at the beginning of the post.

Does code quality even matter?

One can't avoid a nagging question - does the quality of the code produced by agents even matter? Clearly, the agents themselves can understand it (if not today's agent, then at least next year's). Why worry about future maintainability if the agent can maintain it? In other words, does it make sense to just go full vibe-coding?

This is a fair question, and one I don't have an answer to. Right now, for projects I maintain and stand behind, it seems obvious to me that the code should be fully understandable and accepted by me, and the agent is just a tool helping me get to that state more efficiently. It's hard to say what the future holds here; it's going to be interesting, for sure.

| [1] | pycparser has a fair number of direct dependents, but the majority of downloads comes through CFFI, which itself is a major building block for much of the Python ecosystem. |

| [2] | The table-building report says 177, but that's certainly an over-dramatization because it's common for a single conflict to manifest in several ways. |

| [3] | It didn't help the PR's case that it was almost certainly vibe coded. |

| [4] | There was also the lexer to consider, but this seemed like a much simpler job. My impression is that in the early days of computing, lex gained prominence because of strong regexp support which wasn't very common yet. These days, with excellent regexp libraries existing for pretty much every language, the added value of lex over a custom regexp-based lexer isn't very high. That said, it wouldn't make much sense to embark on a journey to rewrite just the lexer; the dependency on PLY would still remain, and besides, PLY's lexer and parser are designed to work well together. So it wouldn't help me much without tackling the parser beast. |

| [5] | I've decided to ask it to port the parser first, leaving the lexer alone. This was to split the work into reasonable chunks. Besides, I figured that the parser is the hard job anyway - if it succeeds in that, the lexer should be easy. That assumption turned out to be correct. |
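Footnote [4] mentions custom regexp-based lexers. A minimal sketch of that technique, using only the standard `re` module, is shown here; the token names and the tiny token set are assumptions for illustration and have nothing to do with pycparser's actual lexer.

```python
import re

# Order matters: earlier alternatives win when several could match.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("ID", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=;(){}]"),
    ("SKIP", r"\s+"),
    ("MISMATCH", r"."),
]
MASTER_RE = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC)
)


def lex(text):
    """Yield (kind, text) pairs, skipping whitespace."""
    for match in MASTER_RE.finditer(text):
        kind = match.lastgroup
        if kind == "SKIP":
            continue
        if kind == "MISMATCH":
            raise SyntaxError(f"unexpected character {match.group()!r}")
        yield kind, match.group()
```

For example, `list(lex("x = 42;"))` gives `[('ID', 'x'), ('OP', '='), ('NUMBER', '42'), ('OP', ';')]`.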

February 05, 2026 11:38 AM UTC

PyBites

Building Useful AI with Asif Pinjari

I interview a lot of professionals and developers, from 20-year veterans to people just starting out on their Python journey.

But my conversation with Asif Pinjari was different.

Asif is still a student (and a Teaching Assistant) at Northern Arizona University. Usually, when I talk to people at this stage of their life and career, they’re completely focused on passing tests or mastering syntax.

Asif, on the other hand, is doing something else: He’s doing things the Pybites way! He’s building with a focus on providing value.

We spent a lot of time discussing a problem I’m seeing quite often now: developers who limit themselves with AI. That is, they learn how to make an API call to OpenAI and call it a day.

But as Asif pointed out during the show, that’s not engineering. That’s just wrapping a product.

Using AI Locally

One of the most impressive parts of our chat was Asif’s take on privacy. He thought ahead and isn’t just blindly sending data to ChatGPT. He’s already caught on to the very real business constraint: Companies are terrified of their data leaking.

Instead of letting that be a barrier, he went down the rabbit hole of Local LLMs (using tools like Ollama) to build systems that run privately.

This to me is the difference between a student mindset and an engineer mindset.

- Student: “How do I get the code to run?”

- Engineer: “How do I solve the user’s problem as safely and securely as possible?”

It Started With a Calculator

We also traced this back to his childhood. Asif told a great story about being a kid and just staring at a calculator, trying to figure out how it knew the answer.

It reminded me that this kind of curiosity (the desire to look under the hood and understand the nuts and bolts) is exactly what’s missing these days. Living and breathing tech from a young age is exactly why so many of us got into tech in the first place!

Enjoy this episode. It’s inspired me to keep building, that’s for sure!

– Julian

Follow Asif

Portfolio – https://asifflix.vercel.app/

GitHub – https://github.com/Asif-0209

LinkedIn – https://www.linkedin.com/in/asif-p-056530232/

Listen and Subscribe Here

February 05, 2026 10:20 AM UTC

Stéphane Wirtel

Certified AI Developer - A Journey of Growth, Grief, and New Beginning

✨ The Certification Just Arrived!

I just received word from Alyra – I’ve officially completed the AI Developer Certification, successfully mastering the fundamentals of Machine Learning and Deep Learning! 🎉

These past three months have been nothing short of transformative. Not just technically, but personally too. This certification represents far more than finishing a course — it’s about rediscovering mathematics, overcoming personal challenges, and proving to myself that I can learn deeply, even when everything feels impossible.

February 05, 2026 12:00 AM UTC

Peter Hoffmann

Local macOS Dev Setup: dnsmasq + caddy-snake for python projects

When working on a single web project, running flask run on a fixed port is usually more than sufficient. However, as soon as you start developing multiple services in parallel, this approach quickly becomes cumbersome: ports collide, you have to remember which service runs on which port, and you end up constantly starting, stopping, and restarting individual development servers by hand.

Using a wildcard local domain (*.lan) through dnsmasq and a vhost proxy with proper WSGI services solves these problems cleanly. Each project gets a stable, memorable local subdomain instead of a port number, services can run side by side without collisions, and process management becomes centralized and predictable. The result is a local development setup with less friction.

dnsmasq on macOS Sonoma (Local DNS with .lan)

This is a concise summary of how to install and configure dnsmasq on macOS Sonoma to resolve local development domains using a .lan wildcard (e.g. *.lan → 127.0.0.1).

1. Install dnsmasq

Using Homebrew:

brew install dnsmasq

Homebrew (on Apple Silicon) installs dnsmasq and places the default config in:

/opt/homebrew/etc/dnsmasq.conf

2. Configure dnsmasq

Edit the configuration file:

sudo vim /opt/homebrew/etc/dnsmasq.conf

Add the following:

# Listen only on localhost

listen-address=127.0.0.1

bind-interfaces

# DNS port

port=53

# Wildcard domain for local development

address=/.lan/127.0.0.1

This maps any *.lan hostname to 127.0.0.1.

It's recommended not to use .dev, as this is a real, Google-owned TLD that browsers force to HTTPS only (it is on the HSTS preload list). Also don't use .local, as this is reserved for mDNS (Bonjour).

3. Tell macOS to use dnsmasq

macOS ignores /etc/resolv.conf, so DNS must be configured per network interface.

Option A: System Settings (GUI)

- System Settings → Network

- Select your active interface (Wi-Fi / Ethernet)

- Details → DNS

- Add: 127.0.0.1

- Move it to the top of the DNS server list

Option B: Command line

networksetup -setdnsservers Wi-Fi 127.0.0.1

(Replace Wi-Fi with the correct interface name if needed.)

4. Start dnsmasq

Run it as a background service:

sudo brew services start dnsmasq

Or run it manually for debugging:

sudo dnsmasq --no-daemon

5. Flush DNS cache

This step is required on Sonoma:

sudo dscacheutil -flushcache

sudo killall -HUP mDNSResponder

6. Test

dig foo.lan

ping foo.lan

Both should resolve to:

127.0.0.1
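The same check can be scripted from Python, which is handy in a project's setup or test scripts. This is only a sketch: the helper name is made up, and the injectable `resolver` parameter exists purely so the function can be exercised without a running dnsmasq.

```python
import socket


def resolves_to_loopback(hostname, resolver=socket.gethostbyname):
    """Return True if hostname resolves to 127.0.0.1, False otherwise."""
    try:
        return resolver(hostname) == "127.0.0.1"
    except OSError:  # socket.gaierror is a subclass of OSError
        return False
```

Once dnsmasq is running and the DNS cache has been flushed, `resolves_to_loopback("foo.lan")` should return `True`.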

Result

You now have:

- dnsmasq running on 127.0.0.1

- Wildcard local DNS via *.lan

- Fully compatible behavior with macOS Sonoma

Caddy

Note: My initial plan was to use caddy with caddy-snake to run multiple vhosts for Python WSGI apps with the configuration below. But this did not work out as expected, because caddy-snake does not run a separate Python interpreter for each project; it only appends the site-packages of each project to sys.path and runs all of them in the same Python interpreter. This leads to problems with different Python versions or incompatible requirements installed in the different venvs. So the approach below only works if you use the same Python version and your requirements are compatible across the different apps.

Caddyfile: host multiple WSGI services

As we want caddy to run wsgi services we need to build caddy-snake:

The caddyfile now needs to be stored in $(brew --prefix)/etc/Caddyfile

{
    auto_https off
}

http://foo.lan {
    bind 127.0.0.1
    route {
        python {
            module_wsgi app:app
            working_dir /Users/you/dev/foo
            venv /Users/you/dev/foo/.venv
        }
    }
    log {
        output stdout
        format console
    }
}

http://bar.lan {
    bind 127.0.0.1
    route {
        python {
            module_wsgi app:app
            working_dir /Users/you/dev/bar
            venv /Users/you/dev/bar/.venv
        }
    }
}
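The `module_wsgi app:app` value appears to follow the usual `module:callable` convention: a module named `app` exposing a WSGI callable that is also named `app`. A minimal, standard-library-only sketch of such an `app.py` is shown below, assuming it sits in the directory given as `working_dir`; a Flask application object would fill the same role.

```python
def app(environ, start_response):
    """Minimal WSGI callable: replies with a plain-text greeting."""
    host = environ.get("HTTP_HOST", "unknown host")
    body = f"Hello from {host}\n".encode("utf-8")
    start_response(
        "200 OK",
        [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(body))),
        ],
    )
    return [body]
```

Requesting http://foo.lan would then be expected to return "Hello from foo.lan", which makes it easy to confirm that each vhost reaches the intended project.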

February 05, 2026 12:00 AM UTC

February 04, 2026

Django Weblog

Recent trends in the work of the Django Security Team

Yesterday, Django issued security releases mitigating six vulnerabilities of varying severity. Django is a secure web framework, and that hasn’t changed. What feels new is the remarkable consistency across the reports we receive now.

Almost every report now is a variation on a prior vulnerability. Instead of uncovering new classes of issues, these reports explore how an underlying pattern from a recent advisory might surface in a similar code path or under a slightly different configuration. These reports are often technically plausible but only sometimes worth fixing. Over time, this has shifted the Security Team’s work away from discovery towards deciding how far a given precedent should extend and whether the impact of the marginal variation rises to the level of a vulnerability.

Take yesterday’s releases:

We patched a “low” severity user enumeration vulnerability in the mod_wsgi authentication handler (CVE 2025-13473). It’s a straightforward variation on CVE 2024-39329, which affected authentication more generally.

We also patched two potential denial-of-service vulnerabilities when handling large, malformed inputs. One exploits inefficient string concatenation in header parsing under ASGI (CVE 2025-14550). Concatenating strings in a loop is known to be slow, and we’ve done fixes in public where the impact is low. The other one (CVE 2026-1285) exploits deeply nested entities. December’s vulnerability in the XML serializer (CVE 2025-64460) was about those very two themes.

Finally, we also patched three potential SQL injection vulnerabilities. One envisioned a developer passing unsanitized user input to a niche feature of the PostGIS backend (CVE 2026-1207), much like CVE 2020-9402. Our security reporting policy assumes that developers are aware of the risks when passing unsanitized user input directly to the ORM. But the division between SQL statements and parameters is well ingrained, and the expectation is that Django will not fail to escape parameters. The last two vulnerabilities (CVE 2026-1287 and CVE 2026-1312) targeted user-controlled column aliases, the latest in a stream of reports stemming from CVE 2022-28346, involving unpacking **kwargs into .filter() and friends, including four security releases in a row in late 2025. You might ask, “who would unpack **kwargs into the ORM?!” But imagine letting users name aggregations in configurable reports. You would have something more like a parameter, and so you would appreciate some protection against crafted inputs.
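For the configurable-reports scenario, an application can also add its own guard before a user-supplied name ever reaches the ORM. The helper below is a hypothetical, application-side sketch and not part of Django or of the fixes described here; the allowed character set, the length limit and the rejection of double underscores are conservative assumptions.

```python
import re

_ALIAS_RE = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def validate_alias(name, max_length=63):
    """Return name if it is a plain identifier, otherwise raise ValueError."""
    if not isinstance(name, str) or len(name) > max_length:
        raise ValueError("invalid alias")
    # Reject anything that is not a simple identifier, and also "__",
    # which has special meaning in ORM lookups.
    if not _ALIAS_RE.fullmatch(name) or "__" in name:
        raise ValueError(f"invalid alias: {name!r}")
    return name
```

A report builder could then call `validate_alias(user_label)` before unpacking the label as a keyword argument into an aggregation or annotation call.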

On top of all that, on a nearly daily basis we get reports duplicating other pending reports, or even reports about vulnerabilities that have already been fixed and publicized. Clearly, reporters are using LLMs to generate (initially) plausible variations.

Security releases come with costs to the community. They interrupt our users’ development workflows, and they also severely interrupt ours.

There are alternatives. The long tail of reports about user-controlled aliases presents an obvious one: we can just re-architect that area. (Thanks to Simon Charette for a pull request doing just that!) Beyond that, there are more drastic alternatives. We can confirm fewer vulnerabilities by placing a higher value on a user's duty to validate inputs, placing a lower value on our prior precedents, or fixing lower severity issues publicly. The risk there is underreacting, or seeing our development workflow disrupted anyway when a decision not to confirm a vulnerability is challenged.

Reporters are clearly benefiting from our commitment to being consistent. For the moment, the Security Team hopes that reacting in a consistent way—even if it means sometimes issuing six patches—outweighs the cost of the security process. It’s something we’re weighing.

As always, keep the responsibly vetted reports coming to security@djangoproject.com.

February 04, 2026 04:00 PM UTC

Python Morsels

Is it a class or a function?

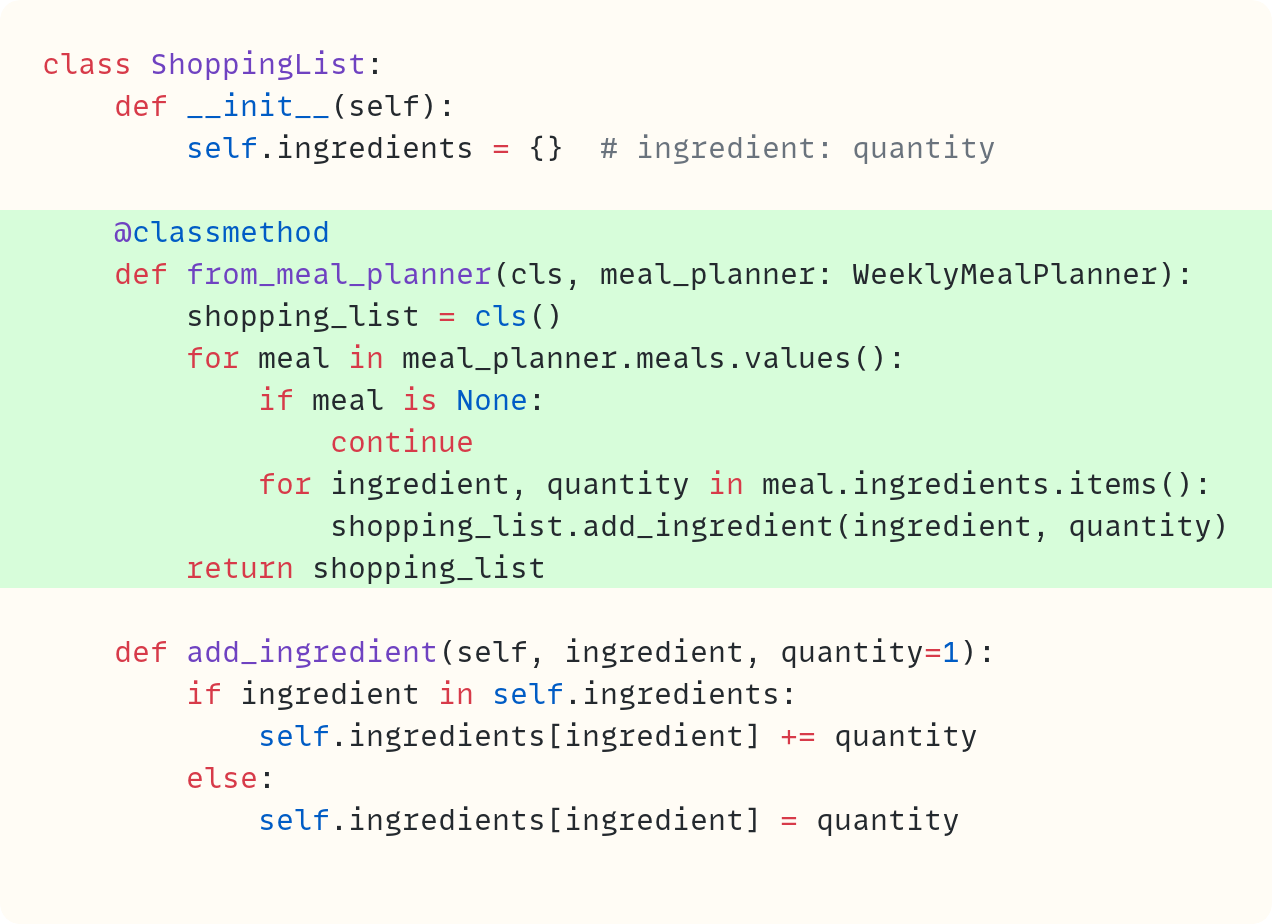

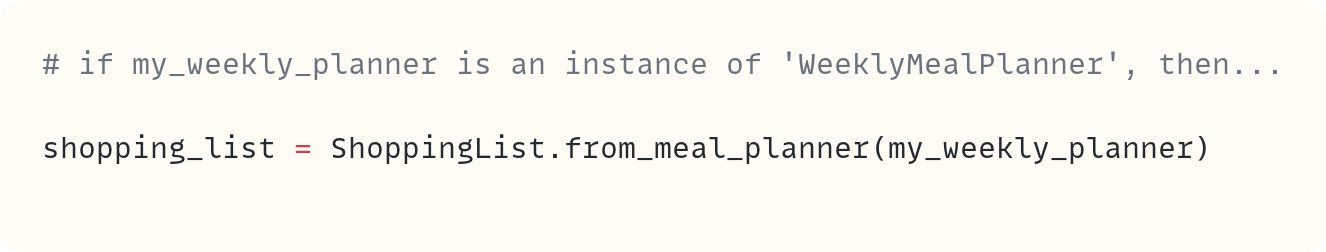

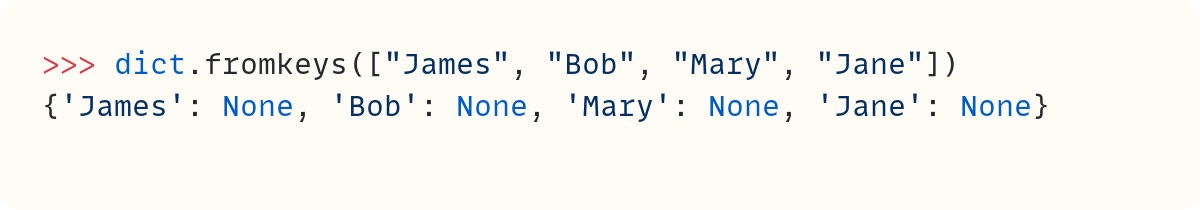

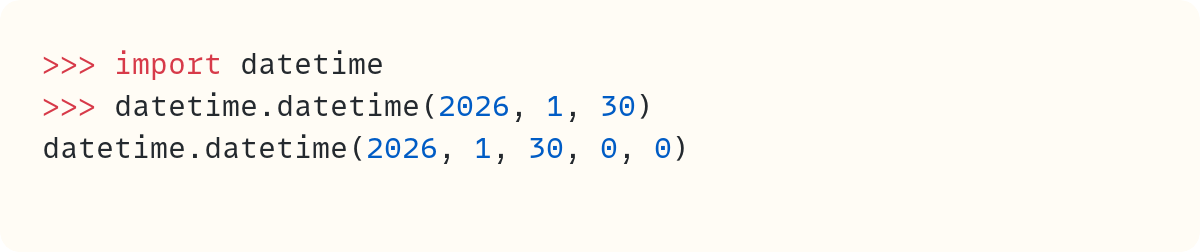

If a callable feels like a function, we often call it a function... even when it's not!

Classes are callable in Python

To create a new instance of a class in Python, we call the class:

>>> from collections import Counter

>>> counts = Counter()

Calling a class returns a new instance of that class:

>>> counts

Counter()

That is, an object whose type is that class:

>>> type(counts)

<class 'collections.Counter'>

In Python, the syntax for calling a function is the same as the syntax for making a new class instance.

Both functions and classes can be called. Functions are called to evaluate the code in that function, and classes are called to make a new instance of that class.
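A small sketch using the standard `inspect` module shows how to tell the two kinds of callable apart; the `double` function is just a made-up example.

```python
import inspect
from collections import Counter


def double(x):
    """An ordinary function, for comparison with the Counter class."""
    return x * 2


# Both are callable, but only one of them is a class.
for obj in (Counter, double):
    print(obj.__name__, callable(obj), inspect.isclass(obj), inspect.isfunction(obj))
```

This prints `Counter True True False` followed by `double True False True`.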

print

Is print a class or …

Read the full article: https://www.pythonmorsels.com/class-or-function/

February 04, 2026 03:30 PM UTC

Robin Wilson

Pharmacy late-night opening hours analysis featured in the Financial Times

Some data analysis I’ve done has been featured in the Financial Times today – see this article (the link may not work any more unless you have an FT subscription – sorry).

The brief story is that I had terrible back pain over Christmas, and spoke to an out-of-hours GP on the phone who prescribed me some muscle relaxant and some strong painkillers. This was at 9pm on a Sunday, so I asked her where my wife could go to pick up the prescription and was told that the closest pharmacy that was open was about 45-60mins drive away.

I’ve lived in Southampton for 18 years now, and years ago I knew there were multiple pharmacies in Southampton that were open until late at night – and one that I think was open 24 hours a day. I was intrigued to see how much this had changed, and managed to find an NHS dataset giving opening hours for all NHS community pharmacies. These data are available back to 2022 – so only ~3 years ago – but I thought I’d have a look.

The results shocked me: between 2022 and 2025, the number of pharmacies open past 9pm on a weekday has decreased by approximately 95%! And there are now large areas of the country where there are no pharmacies open past 9pm on any weekday.

I mentioned this to some friends, one of them put me in touch with a journalist, I sent them the data and was interviewed by them and the result is this article. In case you can’t access it, I’ll quote a couple of the key paragraphs here:

The number of late-night pharmacies in England has fallen more than 90 per cent in three years, according to an analysis of NHS data, raising fresh concerns about patient access to out-of-hours care.

…

The analysis of official NHS records was carried out by geospatial software engineer Robin Wilson and verified by the FT. Wilson, from Southampton, crunched the numbers after his wife had to make a two-hour round trip to collect his prescription for back pain that left him “immobilised”.

I’ll aim to write another post about how I did the analysis sometime, but it was mostly carried out using Python and pandas, along with some maps via GeoPandas and Folium. The charts and maps in the article were produced by the FT in their house style.

February 04, 2026 02:10 PM UTC

Real Python

Why You Should Attend a Python Conference

The idea of attending a Python conference can feel intimidating. You might wonder if you know enough, if you’ll fit in, or if it’s worth your time and money. In this guide, you’ll learn about the different types of Python conferences, what they actually offer, who they’re for, and how attending one can support your learning, confidence, and connection to the wider Python community.

Prerequisites

This guide is for all Python users who want to grow their Python knowledge, get involved with the Python community, or explore new professional opportunities. Your level of experience with Python doesn’t matter, and neither does whether you use Python professionally or as a hobbyist—regularly or only from time to time. If you use Python, you’re a Python developer, and Python conferences are for Python developers!

Brett Cannon, a CPython core developer, once said:

I came for the language, but I stayed for the community. (Source)

If you want to experience this feeling firsthand, then this guide is for you.

Get Your PDF: Click here to download a PDF with practical tips to help you feel prepared for your first Python conference.

Understand What Python Conferences Actually Offer

Attending a Python conference offers several distinct benefits that generally fall into three categories:

- Personal growth: Learn new concepts, tools, and best practices through talks, tutorials, and hands-on sessions that help you deepen your Python skills and build confidence.

- Community involvement: Meet other Python users in person, connect with open-source contributors and maintainers, and experience the collaborative culture that defines the Python community.

- Professional opportunities: Discover potential job openings, meet companies using Python across industries, and form professional connections that can lead to future projects or roles.

The following sections explore each category in more detail to help you recognize what matters most to you when choosing a Python conference.

Personal Growth

One of the biggest benefits of attending a Python conference is the opportunity for personal growth through active learning and engagement.

Python conferences are organized around a program of talks, tutorials, and collaborative sessions that expose you to new ideas, tools, and ways of thinking about Python. The number of program items can range from one at local meetups to over one hundred at larger conferences like PyCon US and EuroPython.

At larger events, you’re exposed to a wide breadth of topics to choose from, while at smaller events, you have fewer options but can usually attend all the sessions you’re interested in. Conference talks are an excellent opportunity to get exposed to new ideas, hear about new tools, or just listen to someone else talk about a topic you’re familiar with, which can be a very educational experience!

Most of these talks are later shared on YouTube, but attending in person allows you to participate in live Q&A sessions where speakers answer audience questions directly. You also have the chance to meet the speaker after the talk and ask follow-up questions that wouldn’t be possible when watching a recording.

Tutorials, on the other hand, are rarely recorded. They tend to be longer than talks and focus on hands-on coding, making them a brilliant way to gain practical, working knowledge of a Python feature or tool. Working through exercises with peers and asking questions in real time can help solidify your understanding of a topic.

Some conferences also include collaborative sprint events, where you get together with other attendees to contribute to open-source projects, typically with the guidance of the project maintainers themselves:

EuroPython Attendees Collaborating During the Sprints (Image: Braulio Lara)


Participating in sprints under the mentorship of the project maintainers is a great way to boost your confidence in your skills and get some open-source contributions under your belt.

Community Involvement

Developers are used to collaborating on open-source projects with people around the world, but working together online isn’t the same as having a face-to-face conversation. Python conferences fill that gap by giving developers a dedicated space to meet and connect in person.

Read the full article at https://realpython.com/python-conference-guide/ »

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]

February 04, 2026 02:00 PM UTC

Seth Michael Larson

Dumping Nintendo e‑Reader Card “ROMs”

The Nintendo e‑Reader was a peripheral released for the Game Boy Advance in 2001. The Nintendo e‑Reader allowed scanning “dotcode strips” to access extra content within games or to play mini-games. Today I'll show you how to use the GB Operator, a Game Boy ROM dumping tool, in order to access the ROM encoded onto e‑Reader card dotcodes.

I'll be demonstrating using a new entrant to e‑Reader game development for the venerable platform: Retro Dot Codes by Matt Greer. Matt regularly posts about his process developing and printing e‑Reader cards and games in 2026. I was a recipient of one of his free e‑Reader card giveaways and purchased the Retro Dot Cards “Series 1” pack, which I'm planning to open and play for the blog.

Dumping a Nintendo e-Reader card contents

The process is straightforward but requires a working GBA or equivalent (GBA, GBA SP, Game Boy Player, DS, or Analogue Pocket *), a Nintendo e-Reader cartridge, and a GBA ROM dumper like GB Operator. Launch the e‑Reader cartridge using a Game Boy Advance, Analogue Pocket, or Game Boy Player. The e-Reader software prompts you to scan the dotcodes.

The Solitaire card stores its program data on two “long” dotcode strips consisting of 28 “blocks” per-strip encoding 104 bytes-per-block for a total of 5824 bytes on two strips (2×28×104=5824). If you want to see approximately how large a dotcode strip is, open this page in a desktop browser. After scanning each side of the Solitaire card you can play Solitaire on your console:

I'll be honest, I was never into Solitaire as a kid, I was more “Space Cadet Pinball” on Windows... Anyways, let's archive the “ROM” of this game so even if we lose the physical card we can still play.

Turn off your device and connect the e‑Reader cartridge to the GB Operator. Following the steps I documented for Ubuntu, start the “Epilogue Playback” software and dump the e‑Reader ROM and, critically, the save data. The Nintendo e‑Reader supports saving your already scanned game in the save data of the cartridge so you can play again next time you boot without needing to re-scan the cards.

Now we have a .sav file. This file works as an archive of the program, as we can load our e-Reader ROM and this save into a GBA emulator to play again.

Success!

Examining e-Reader Card ROMs

Now that we have the .sav file for the Solitaire ROM, let's see what we can find inside. The file itself is mostly empty, consisting almost entirely of 0xFF and 0x00 bytes:

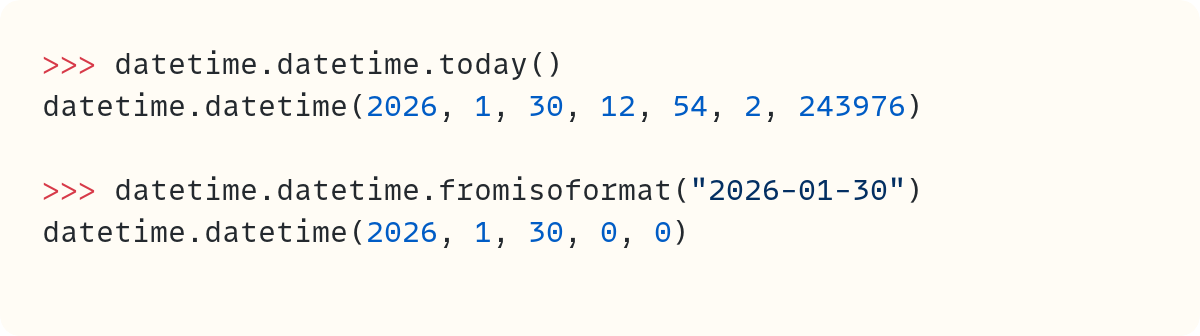

>>> data = open("Solitaire.sav", "rb").read()

>>> len(data), hex(len(data))

(131072, '0x20000')

>>> sum(b == 0xFF for b in data)

118549

>>> sum(b == 0x00 for b in data)

8200

We know from the data limits of 2 dotcode strips that there's only 5824 bytes maximum for program data. If we look at the format of e‑Reader save files documented at caitsith2.com we can see what this data means. I've duplicated the page below, just in case:

ereader save format.txt

US E-reader save format

Base Address = 0x00000 (Bank 0, address 0x0000)

Offset Size Description

0x0000 0x6FFC Bank 0 continuation of save data.

0x6FFD 0x6004 All 0xFF

0xD000 0x0053 43617264 2D452052 65616465 72203230

30310000 67B72B2E 32333333 2F282D2E

31323332 302B2B30 31323433 322F2A2C

30333333 312F282C 30333233 3230292D

30303131 2F2D2320 61050000 80FD7700

000001

0xD053 0x0FAD All 0x00s

0xE000 0x1000 Repeat 0xD000-0xDFFF

0xF000 0x0F80 All 0xFFs

0xFF80 0x0080 All 0x00s

Base Address = 0x10000 (Bank 1, address 0x0000)

Offset Size Description

0x0000 0x04 CRC (calculated starting from 0x0004, and amount of data to calculate is

0x30 + [0x002C] + [0x0030].)

0x0004 0x24 Program Title (Null terminated) - US = Straight Ascii, Jap = Shift JIS

0x0028 0x04 Program Type

0x0204 = ARM/THUMB Code/data (able to use the GBA hardware directly, Linked to 0x02000000)

0x0C05 = 6502 code/data (NES limitations, 1 16K program rom + 1-2 8K CHR rom, mapper 0 and 1)

0x0400 = Z80 code/data (Linked to 0x0100)

0x002C 0x04 Program Size

= First 2 bytes of Program data, + 2

0x0030 0x04 Unknown

0x0034 Program Size Program Data (vpk compressed)

First 2 bytes = Size of vpk compressed data

0xEFFF 0x01 End of save area in bank 1. Resume save data in bank 0.

The CRC is calculated on Beginning of Program Title, to End of Program Data.

If the First byte of Program Title is 0xFF, then there is no save present.

If the CRC calculation does not match stored CRC, then the ereader comes up with

an ereader memory error.

CRC calculation Details

CRC table is calculated from polynomial 0x04C11DB7 (standard CRC32 polynomial)

with Reflection In. (Table entry 0 is 0, and 1 is 0x77073096...)

CRC calculation routine uses Initial value of 0xAA478422. The Calculation routine

is not a standard CRC32 routine, but a custom made one, Look in "crc calc.c" for

the complete calculation algorithm.

Revision history

v1.0 - First release

V1.1 - Updated/Corrected info about program type.

v1.2 - Updated info on Japanese text encoding

v1.3 - Info on large 60K+ vpk files.

From this format specification we can see that the program data starts around offset 0x10000 with the CRC, the program title, type, size, and the program data which is compressed using the VPK0 compression algorithm.
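As a rough sketch, the bank 1 header described above could be read in Python like this. The field offsets come from the format notes; the `parse_ereader_header` name, the `EReaderHeader` tuple, and the little-endian byte order for the header fields are assumptions made for illustration.

```python
import struct
from typing import NamedTuple

BANK1_BASE = 0x10000  # bank 1 starts here in the 128 KiB save file


class EReaderHeader(NamedTuple):
    crc: int
    title: str
    program_type: int
    program_size: int
    vpk_size: int


def parse_ereader_header(data: bytes) -> EReaderHeader:
    """Read the bank 1 header fields listed in the save format notes."""
    # CRC (4 bytes) followed by the 0x24-byte title field.
    crc, raw_title = struct.unpack_from("<I36s", data, BANK1_BASE)
    # Program type, program size, and the unknown field at 0x28..0x33.
    # Little-endian is an assumption, not stated in the format notes.
    program_type, program_size, _unknown = struct.unpack_from(
        "<III", data, BANK1_BASE + 0x28
    )
    # The first two bytes of the program data hold the VPK-compressed size.
    (vpk_size,) = struct.unpack_from("<H", data, BANK1_BASE + 0x34)
    # The title is null-terminated; US saves use plain ASCII.
    title = raw_title.split(b"\x00", 1)[0].decode("ascii", errors="replace")
    return EReaderHeader(crc, title, program_type, program_size, vpk_size)
```

With the Solitaire save loaded as `data`, `parse_ereader_header(data).title` should come back as `Solitaire` if these assumptions hold.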

Searching through our save data, sure enough we see some data at the offsets we expect like the program title and the VPK0 magic bytes vpk0:

>>> hex(data.index(b"Solitaire\x00"))

'0x10004'

>>> hex(data.index(b"vpk0"))

'0x10036'

We know that the VPK0-compressed blob length is encoded in the two bytes before the magic header, little-endian. Let's grab that value and write the VPK0-compressed blob to a new file:

>>> vpk_idx = data.index(b"vpk0")

>>> vpk_len = int.from_bytes(

...     data[vpk_idx-2:vpk_idx], "little")

>>> with open("Solitaire.vpk", "wb") as f:

...     f.write(data[vpk_idx:vpk_idx+vpk_len])

In order to decompress the program data we'll need a tool that can decompress VPK0. The e‑Reader development tools repository points to nevpk. You can download the source code for multi-platform support and compile using cmake:

curl -L https://github.com/breadbored/nedclib/archive/749391c049756dc776b313c87da24b7f47b78eea.zip \

-o nedclib.zip

unzip nedclib.zip

cmake . && make

# Now we can use nevpk to decompress the program.

nevpk -d -i Solitaire.vpk -o Solitaire.bin

md5sum Solitaire.bin

3a898e8e1aedc091acbf037db6683a41 Solitaire.bin

This Solitaire.bin file is the original binary that Matt compiled before compressing, adding headers, and printing the program onto physical cards. Pretty cool that we can reverse the process this far!

Nintendo e-Reader and Analogue Pocket

The Analogue Pocket is a hardware emulator that uses an FPGA to emulate multiple retro gaming consoles, including the GBA. One of the prominent features of this device is its cartridge slot, allowing you to play cartridges without dumping them to ROM files first.

But there's just one problem with using the Analogue Pocket with the Nintendo e-Reader. The cartridge slot is placed low on the device, making it impossible to insert oddly-shaped cartridges like the Nintendo e-Reader. Enter the E-Reader Extender! This project by Brian Hargrove extends the cartridge slot while giving your Analogue Pocket a big hug.

Playing Nintendo e-Reader games on Delta Emulator

The best retro emulator is the one you bring with you. For this reason, the Delta Emulator is my emulator of choice, as it runs on iOS. However, there are challenges to running e-Reader games on Delta: specifically, Delta only allows one save file per GBA ROM. This means that to change games you'd need to import a new e-Reader save file. Delta stores ROMs and saves by their ROM checksum (MD5).
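Since the checksum matters here, a small sketch of computing a ROM file's MD5 in Python may be useful. The `rom_md5` helper is hypothetical, and how Delta uses the digest internally is not shown.

```python
import hashlib


def rom_md5(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        # Read fixed-size chunks so large ROMs are not loaded all at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Calling `rom_md5()` on a dumped ROM file should give the same value as running `md5sum` on it.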

Thanks for keeping RSS alive! ♥

February 04, 2026 12:00 AM UTC

February 03, 2026

PyCoder’s Weekly

Issue #720: Subprocess, Memray, Callables, and More (Feb. 3, 2026)

#720 – FEBRUARY 3, 2026

View in Browser »

Ending 15 Years of subprocess Polling

Python’s standard library subprocess module relies on busy-loop polling to determine whether a process has completed yet. Modern operating systems have callback mechanisms to do this, and Python 3.15 will now take advantage of these.

GIAMPAOLO RODOLA

Django: Profile Memory Usage With Memray

Memory usage can be hard to keep under control in Python projects. Django projects can be particularly susceptible to memory bloat, as they may import many large dependencies. Learn how to use memray to learn what is going on.

ADAM JOHNSON

B2B Authentication for any Situation - Fully Managed or BYO

What your sales team needs to close deals: multi-tenancy, SAML, SSO, SCIM provisioning, passkeys…What you’d rather be doing: almost anything else. PropelAuth does it all for you, at every stage. →

PROPELAUTH sponsor

Create Callable Instances With Python’s .__call__()

Learn Python callables: what “callable” means, how to use dunder call, and how to build callable objects with step-by-step examples.

REAL PYTHON course
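As a minimal illustration of the callable-instance idea mentioned in the item above (the `Multiplier` class is made up for this sketch and is not taken from the course):

```python
class Multiplier:
    """Instances behave like functions thanks to .__call__()."""

    def __init__(self, factor):
        self.factor = factor

    def __call__(self, value):
        # Runs whenever the instance is called like a function.
        return value * self.factor


double = Multiplier(2)
print(callable(double))  # True
print(double(21))        # 42
```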

Articles & Tutorials

The C-Shaped Hole in Package Management

System package managers and language package managers are solving different problems that happen to overlap in the middle. This causes complications when languages like Python depend on system libraries. This article is a deep dive on the different pieces involved and why it is the way it is.

ANDREW NESBITT

Use \z Not $ With Python Regular Expressions

The $ in a regular expression is used to match the end of a line, but in Python, it matches a line end both with and without a \n. Python 3.14 added support for \z, which is widely supported by other languages, to get around this problem.

SETH LARSON
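The difference described in the regex item above can be seen in a short sketch. It uses `\Z`, Python's long-standing spelling of the end-of-string anchor, so that it also runs on versions before 3.14; on 3.14 and later, `\z` should behave the same way. The sample string is made up for illustration.

```python
import re

text = "abc\n"

# `$` also matches just before a single trailing newline.
dollar_match = re.search(r"abc$", text)

# `\Z` only matches at the very end of the string.
end_match = re.search(r"abc\Z", text)

print(dollar_match is not None)  # True
print(end_match is not None)     # False
```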

Python errors? Fix ‘em fast for FREE with Honeybadger

If you support web apps in production, you need intelligent logging with error alerts and de-duping. Honeybadger filters out the noise and transforms Python logs into contextual issues so you can find and fix errors fast. Get your FREE account →

HONEYBADGER sponsor

Speeding Up Pillow’s Open and Save

Hugo used the Tachyon profiler to examine the open and save calls in the Pillow image processing module. He found ways to optimize the calls and has submitted a PR; this post tells you about it.

HUGO VAN KEMENADE

Some Notes on Starting to Use Django

Julia has decided to add Django to her coding skills and has written some notes from her first experiences. See also the associated HN discussion.

JULIA EVANS

How Long Does It Take to Learn Python?

This guide breaks down how long it takes to learn Python, with realistic timelines, weekly study plans, and strategies to speed up your progress.

REAL PYTHON

uv Cheatsheet

A uv cheatsheet that lists the most common and useful uv commands across project management, working with scripts, installing tools, and more!

MATHSPP.COM • Shared by Rodrigo Girão Serrão

What’s New in pandas 3

pandas 3.0 has just been released. This article uses a real‑world example to explain the most important differences between pandas 2 and 3.

MARC GARCIA

GeoPandas Basics: Maps, Projections, and Spatial Joins

Dive into GeoPandas with this tutorial covering data loading, mapping, CRS concepts, projections, and spatial joins for intuitive analysis.

REAL PYTHON

Things I’ve Learned in My 10 Years as an Engineering Manager

Non-obvious advice that Jampa wishes he’d learned sooner. Associated HN Discussion

JAMPA UCHOA

Django Views Versus the Zen of Python

Django’s generic class-based views often clash with the Zen of Python. Here’s why the base View class feels more Pythonic.

KEVIN RENSKERS

Projects & Code

Events

Weekly Real Python Office Hours Q&A (Virtual)

February 4, 2026

REALPYTHON.COM

Canberra Python Meetup

February 5, 2026

MEETUP.COM

Sydney Python User Group (SyPy)

February 5, 2026

SYPY.ORG

PyDelhi User Group Meetup

February 7, 2026

MEETUP.COM

PiterPy Meetup

February 10, 2026

PITERPY.COM

Leipzig Python User Group Meeting

February 10, 2026

MEETUP.COM

Happy Pythoning!

This was PyCoder’s Weekly Issue #720.

View in Browser »

[ Subscribe to 🐍 PyCoder’s Weekly 💌 – Get the best Python news, articles, and tutorials delivered to your inbox once a week >> Click here to learn more ]

February 03, 2026 07:30 PM UTC

Mike Driscoll

Python Typing Book Kickstarter

Python has had type hinting support since Python 3.5, over TEN years ago! However, Python’s type annotations have changed repeatedly over the years. In Python Typing: Type Checking for Python Programmers, you will learn all you need to know to add type hints to your Python applications effectively.

You will also learn how to use Python type checkers, configure them, and set them up in pre-commit or GitHub Actions. This knowledge will give you the power to check your code and your team’s code automatically before merging, hopefully catching defects before they make it into your products.

What You’ll Learn

You will learn all about Python’s support for type hinting (annotations). Specifically, you will learn about the following topics:

- Variable annotations

- Function annotations

- Type aliases

- New types

- Generics

- Hinting callables

- Annotating TypedDict

- Annotating Decorators and Generators

- Using Mypy for type checking

- Mypy configuration

- Using ty for type checking

- ty configuration

- and more!

Rewards to Choose From

There are several different rewards you can get in this Kickstarter:

- A signed paperback copy of the book (See Stretch Goals)

- An eBook copy of the book in PDF and ePub

- A t-shirt with the cover art from the book (See Stretch Goals)

- Other Python eBooks

The post Python Typing Book Kickstarter appeared first on Mouse Vs Python.

February 03, 2026 06:17 PM UTC

Django Weblog

Django security releases issued: 6.0.2, 5.2.11, and 4.2.28

In accordance with our security release policy, the Django team is issuing releases for Django 6.0.2, Django 5.2.11, and Django 4.2.28. These releases address the security issues detailed below. We encourage all users of Django to upgrade as soon as possible.

CVE-2025-13473: Username enumeration through timing difference in mod_wsgi authentication handler

The django.contrib.auth.handlers.modwsgi.check_password() function for authentication via mod_wsgi allowed remote attackers to enumerate users via a timing attack.

Thanks to Stackered for the report.

This issue has severity "low" according to the Django security policy.

CVE-2025-14550: Potential denial-of-service vulnerability via repeated headers when using ASGI

When receiving duplicates of a single header, ASGIRequest allowed a remote attacker to cause a potential denial-of-service via a specifically created request with multiple duplicate headers. The vulnerability resulted from repeated string concatenation while combining repeated headers, which produced super-linear computation resulting in service degradation or outage.

Thanks to Jiyong Yang for the report.

This issue has severity "moderate" according to the Django security policy.
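To illustrate the general pattern behind the repeated-header issue above (this is not Django's actual code or patch), the sketch below contrasts combining header values by repeated concatenation with a single `str.join()` call. The function names and the comma separator are assumptions for illustration.

```python
def combine_by_concatenation(values):
    """Each += may copy the whole accumulated string again."""
    combined = ""
    for i, value in enumerate(values):
        if i:
            combined += ","
        combined += value
    return combined


def combine_by_join(values):
    """str.join() builds the result in one pass over the values."""
    return ",".join(values)
```

Both functions return the same string; the difference is only in how much copying can happen as the number of repeated values grows.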

CVE-2026-1207: Potential SQL injection via raster lookups on PostGIS

Raster lookups on GIS fields (only implemented on PostGIS) were subject to SQL injection if untrusted data was used as a band index.

As a reminder, all untrusted user input should be validated before use.

Thanks to Tarek Nakkouch for the report.

This issue has severity "high" according to the Django security policy.
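As a hedged sketch of the kind of validation the reminder above refers to, untrusted input meant to be a band index could be checked before it reaches a raster lookup. The `validate_band_index` helper is hypothetical and not part of Django, and treating valid indexes as non-negative integers is an assumption.

```python
def validate_band_index(value):
    """Return value as an int, or raise ValueError for anything else."""
    # bool is a subclass of int, so reject it explicitly.
    if isinstance(value, bool):
        raise ValueError("band index must be an integer")
    if isinstance(value, int):
        index = value
    elif isinstance(value, str) and value.isascii() and value.isdigit():
        # Only plain ASCII digit strings are converted.
        index = int(value)
    else:
        raise ValueError("band index must be an integer")
    if index < 0:
        raise ValueError("band index must be non-negative")
    return index
```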

CVE-2026-1285: Potential denial-of-service vulnerability in django.utils.text.Truncator HTML methods

django.utils.text.Truncator.chars() and Truncator.words() methods (with html=True) and truncatechars_html and truncatewords_html template filters were subject to a potential denial-of-service attack via certain inputs with a large number of unmatched HTML end tags, which could cause quadratic time complexity during HTML parsing.

Thanks to Seokchan Yoon for the report.

This issue has severity "moderate" according to the Django security policy.

CVE-2026-1287: Potential SQL injection in column aliases via control characters

FilteredRelation was subject to SQL injection in column aliases via control characters, using a suitably crafted dictionary, with dictionary expansion, as the **kwargs passed to QuerySet methods annotate(), aggregate(), extra(), values(), values_list(), and alias().

Thanks to Solomon Kebede for the report.

This issue has severity "high" according to the Django security policy.

CVE-2026-1312: Potential SQL injection via QuerySet.order_by and FilteredRelation

QuerySet.order_by() was subject to SQL injection in column aliases containing periods when the same alias was, using a suitably crafted dictionary, with dictionary expansion, used in FilteredRelation.

Thanks to Solomon Kebede for the report.

This issue has severity "high" according to the Django security policy.

Affected supported versions

- Django main

- Django 6.0

- Django 5.2

- Django 4.2

Resolution

Patches to resolve the issue have been applied to Django's main, 6.0, 5.2, and 4.2 branches. The patches may be obtained from the following changesets.

CVE-2025-13473: Username enumeration through timing difference in mod_wsgi authentication handler

- On the main branch

- On the 6.0 branch

- On the 5.2 branch

- On the 4.2 branch

CVE-2025-14550: Potential denial-of-service vulnerability via repeated headers when using ASGI

- On the main branch

- On the 6.0 branch

- On the 5.2 branch

- On the 4.2 branch

CVE-2026-1207: Potential SQL injection via raster lookups on PostGIS

- On the main branch

- On the 6.0 branch

- On the 5.2 branch

- On the 4.2 branch

CVE-2026-1285: Potential denial-of-service vulnerability in django.utils.text.Truncator HTML methods

- On the main branch

- On the 6.0 branch

- On the 5.2 branch

- On the 4.2 branch

CVE-2026-1287: Potential SQL injection in column aliases via control characters

- On the main branch

- On the 6.0 branch

- On the 5.2 branch

- On the 4.2 branch

CVE-2026-1312: Potential SQL injection via QuerySet.order_by and FilteredRelation

- On the main branch

- On the 6.0 branch

- On the 5.2 branch

- On the 4.2 branch

The following releases have been issued

- Django 6.0.2 (download Django 6.0.2 | 6.0.2 checksums)

- Django 5.2.11 (download Django 5.2.11 | 5.2.11 checksums)

- Django 4.2.28 (download Django 4.2.28 | 4.2.28 checksums)

The PGP key ID used for this release is Jacob Walls: 131403F4D16D8DC7

General notes regarding security reporting

As always, we ask that potential security issues be reported via private email to security@djangoproject.com, and not via Django's Trac instance, nor via the Django Forum. Please see our security policies for further information.

February 03, 2026 02:13 PM UTC

Real Python

Getting Started With Google Gemini CLI

This video course will teach you how to use Gemini CLI to bring Google’s AI-powered coding assistance directly into your terminal. After you authenticate with your Google account, this tool will be ready to help you analyze code, identify bugs, and suggest fixes—all without leaving your familiar development environment.

Imagine debugging code without switching between your console and browser, or picture getting instant explanations for unfamiliar projects. Like other command-line AI assistants, Google’s Gemini CLI brings AI-powered coding assistance directly into your command line, allowing you to stay focused in your development workflow.

Whether you’re troubleshooting a stubborn bug, understanding legacy code, or generating documentation, this tool acts as an intelligent pair-programming partner that understands your codebase’s context.

You’re about to install Gemini CLI, authenticate with Google’s free tier, and put it to work on an actual Python project. You’ll discover how natural language queries can help you understand code faster and catch bugs that might slip past manual review.

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]

February 03, 2026 02:00 PM UTC

PyBites

Coding can be super lonely

I hate coding solo.

Not in the moment or when I’m in the zone, I mean in the long run.

I love getting into that deep focus where I’m locked in and hours pass by in a second!

But I hate snapping out of it and not having anyone to chat with about it. (I’m lucky that’s not the case anymore though – thanks Bob!)

So it’s no surprise that many of the devs I chat with on Zoom calls or in person share the same sentiment.

Not everyone has a Bob though. Many people don’t have anyone in their circle that they can talk to about code.

- No one to share the hardships with.

- No one to troubleshoot problems with.

- No one to ask for a code review or feedback.

- No one to learn from experience with.

It can be a lonely experience.

And just as bad, it leads to stagnation. You can spend years coding in a silo and feel like you haven’t grown at all. That feeling of being a junior dev becomes unshakable.

When you work in isolation, you’re operating in a vacuum. Without external input, your vacuum becomes an echo chamber.

- Your bad habits become baked in.

- You don’t learn new ways of doing things (no new tricks!)

- And worst of all – you have no idea you’re even doing it.

As funny as it sounds, as devs I think we all need other devs around us who will create friction. Without the friction of other developers looking at your work, you don’t grow.

Some of my most memorable learning experiences in my first dev job were with my colleague, sharing ideas on a whiteboard and talking through code. (Thanks El!)

If you haven’t had the experience of this kind of community and support, then you’re missing out. Here’s what I want you to do this week:

- Go seek out a Code Review: Find someone more senior than you and ask them to give you their two cents on your coding logic. Note I’m suggesting logic and not your syntax. Let’s target your thought process!

- Build for Someone Else: Go build a tool for a colleague or a friend. The second another person uses your code it breaks the cycle/vacuum because you’re now accountable for the bugs, suggestions and UX.

- Public Accountability: Join our community, tell us what you’re going to build and document your progress! If no one is watching, it’s too easy to quit when the engineering gets hard (believe me, I know!).

At the end of the day, you don’t become a Senior Developer and break through to the next level of your Python journey by typing in a dark room alone (as enjoyable as that may be sometimes).

You become one by engaging with the community, sharing what you’re doing and learning from others.

If you’re stuck in a vacuum, join the community, reply to my welcome DM, and check out our community calendar.

- Sign up for our Accountability Sessions.

- Keep an eye out for Live Sessions Bob and I are hosting every couple of weeks.

Julian

This was originally sent to our email list. Join here.

February 03, 2026 11:02 AM UTC

Python Bytes

#468 A bolt of Django

Topics covered in this episode:

- django-bolt: Faster than FastAPI, but with Django ORM, Django Admin, and Django packages (https://github.com/FarhanAliRaza/django-bolt?featured_on=pythonbytes)

- pyleak (https://github.com/deepankarm/pyleak?featured_on=pythonbytes)

- More Django (three articles)

- Datastar (https://data-star.dev?featured_on=pythonbytes)

- Extras

- Joke

Watch on YouTube (https://www.youtube.com/watch?v=DhfAWhLrT78)

About the show

Sponsored by us! Support our work through:

- Our courses at Talk Python Training (https://training.talkpython.fm/?featured_on=pythonbytes)

- The Complete pytest Course (https://courses.pythontest.com/p/the-complete-pytest-course?featured_on=pythonbytes)

- Patreon Supporters (https://www.patreon.com/pythonbytes)

Connect with the hosts

- Michael: @mkennedy@fosstodon.org / @mkennedy.codes (bsky)

- Brian: @brianokken@fosstodon.org / @brianokken.bsky.social

- Show: @pythonbytes@fosstodon.org / @pythonbytes.fm (bsky)

Join us on YouTube at pythonbytes.fm/live (https://pythonbytes.fm/stream/live) to be part of the audience. Usually Monday at 11am PT. Older video versions available there too.

Finally, if you want an artisanal, hand-crafted digest of every week of the show notes in email form? Add your name and email to our friends of the show list (https://pythonbytes.fm/friends-of-the-show), we'll never share it.

Brian #1: django-bolt : Faster than FastAPI, but with Django ORM, Django Admin, and Django packages

- Farhan Ali Raza

- High-Performance Fully Typed API Framework for Django

- Inspired by DRF, FastAPI, Litestar, and Robyn

- Django-Bolt docs (https://bolt.farhana.li?featured_on=pythonbytes)

- Interview with Farhan on Django Chat Podcast (https://djangochat.com/episodes/building-a-django-api-framework-faster-than-fastapi?featured_on=pythonbytes)

- And a walkthrough video (https://www.youtube.com/watch?v=Pukr-fT4MFY)

Michael #2: pyleak

- Detect leaked asyncio tasks, threads, and event loop blocking with stack trace in Python. Inspired by goleak.

- Has patterns for

  - Context managers

  - decorators

- Checks for

  - Unawaited asyncio tasks

  - Threads

  - Blocking of an asyncio loop

  - Includes a pytest plugin so you can do @pytest.mark.no_leaks

Brian #3: More Django (three articles)

- Migrating From Celery to Django Tasks (https://paultraylor.net/blog/2026/migrating-from-celery-to-django-tasks/?featured_on=pythonbytes)

  - Paul Taylor

  - Nice intro of how easy it is to get started with Django Tasks

- Some notes on starting to use Django (https://jvns.ca/blog/2026/01/27/some-notes-on-starting-to-use-django/?featured_on=pythonbytes)

  - Julia Evans

  - A handful of reasons why Django is a great choice for a web framework

    - less magic than Rails

    - a built-in admin

    - nice ORM

    - automatic migrations

    - nice docs

    - you can use sqlite in production

    - built in email

  - The definitive guide to using Django with SQLite in production (https://alldjango.com/articles/definitive-guide-to-using-django-sqlite-in-production?featured_on=pythonbytes)

    - I’m gonna have to study this a bit.

    - The conclusion states one of the benefits is “reduced complexity”, but, it still seems like quite a bit to me.

Michael #4: Datastar

- Sent to us by Forrest Lanier

- Lots of work by Chris May

- Out on Talk Python (https://talkpython.fm/episodes/all?featured_on=pythonbytes) soon.

- Official Datastar Python SDK (https://github.com/starfederation/datastar-python?featured_on=pythonbytes)

- Datastar is a little like HTMX, but

  - The single source of truth is your server

  - Events can be sent from server automatically (using SSE)

    - e.g

      yield SSE.patch_elements( f"""{(#HTML#)}{datetime.now().isoformat()}""" )

- Why I switched from HTMX to Datastar (https://everydaysuperpowers.dev/articles/why-i-switched-from-htmx-to-datastar/?featured_on=pythonbytes) article

Extras

Brian:

- Django Chat: Inverting the Testing Pyramid - Brian Okken (https://djangochat.com/episodes/inverting-the-testing-pyramid-brian-okken?featured_on=pythonbytes)

  - Quite a fun interview

- PEP 686 – Make UTF-8 mode default (https://peps.python.org/pep-0686/?featured_on=pythonbytes)

  - Now with status “Final” and slated for Python 3.15

Michael:

- Prayson Daniel’s Paper tracker (https://fosstodon.org/@proteusiq/115962026225790683)

- Ice Cubes (https://github.com/Dimillian/IceCubesApp?featured_on=pythonbytes) (open source Mastodon client for macOS)

- Rumdl for PyCharm (https://github.com/rvben/rumdl-intellij?featured_on=pythonbytes), et. al

- cURL Gets Rid of Its Bug Bounty Program Over AI Slop Overrun (https://arstechnica.com/security/2026/01/overrun-with-ai-slop-curl-scraps-bug-bounties-to-ensure-intact-mental-health/?featured_on=pythonbytes)

- Python Developers Survey 2026 (https://surveys.jetbrains.com/s3/python-developers-survey-2026?featured_on=pythonbytes)

Joke: Pushed to prod (https://x.com/PR0GRAMMERHUM0R/status/2015711332947902557?featured_on=pythonbytes)

February 03, 2026 08:00 AM UTC

Daniel Roy Greenfeld

We moved to Manila!

Last year we relocated to Metro Manila, Philippines for the foreseeable future. Audrey's mother is from here, and we wanted our daughter Uma to have the opportunity to spend time with her extended family and experience another line of her heritage.

Where are you living?

In Makati, a city that contains one of the major business districts in Metro Manila. Specifically, we're in Salcedo Village, a neighborhood in the CBD made up of towering residential and business buildings with numerous shops, markets, and a few parks. This area allows for a walkable life, which is important to us coming from London.

What about the USA?

The USA is our homeland and we're US citizens. We still have family and friends there. We're hoping to visit the US at least once a year.

What about the UK?

We loved living in London, and have many good friends there. I really enjoyed working for Kraken Tech, but my time there came to an end, so our visas were no longer valid. We hope to visit the UK (and the rest of Europe) as tourists, but without the family connection it's harder to justify than trips to the homeland.

What about your daughter?

Uma loves Manila and is in second grade at an international school here, within walking distance of our residence. We had looked into getting her into a local public school with a notable science program, but the paperwork required too much lead time. We do like the small class sizes at her current school, and how they accommodate the different learning speeds of students. She will probably stay there for a while.

For extracurricular activities she's enjoying Brazilian Jiu-Jitsu, climbing, yoga, and swimming.

If I'm in Manila can I meet up with you?

Sure! Some options:

- We're long-time members of the Python Philippines community, so you can often find us at their events

- If you train in BJJ, I'm often at Open Mat Makati. Just let me know ahead of time so I can plan around it

- If you want to meet up for coffee, hit me up on social media. Manila is awesome for coffee shops!

February 03, 2026 06:41 AM UTC

February 02, 2026

Real Python

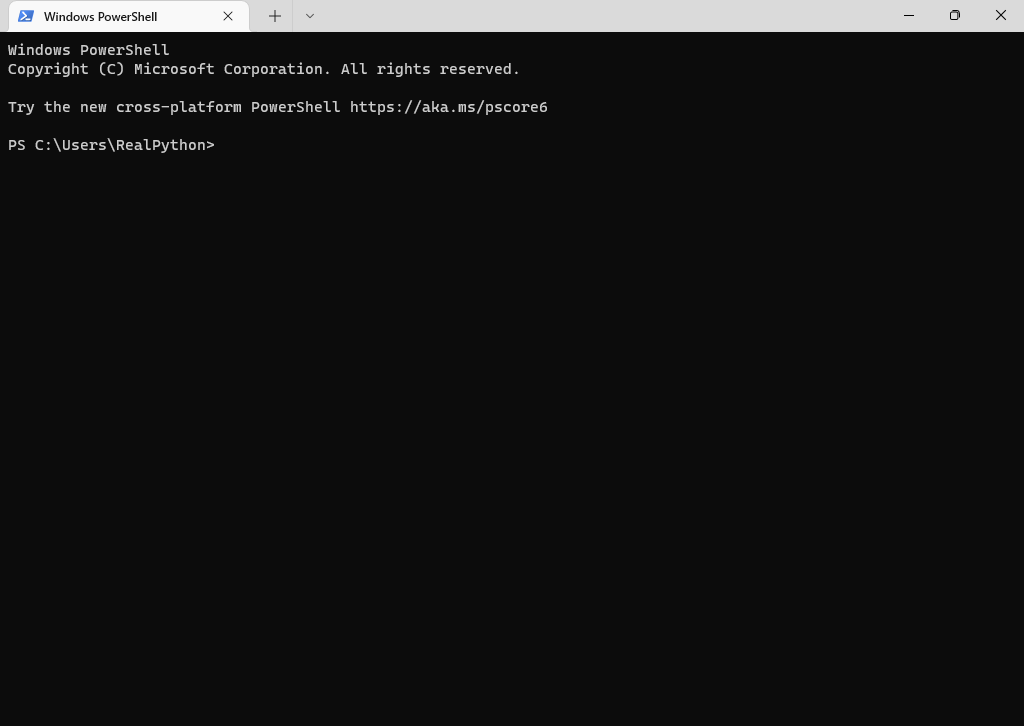

The Terminal: First Steps and Useful Commands for Python Developers

The terminal provides Python developers with direct control over their operating system through text commands. Instead of clicking through menus, you type commands to navigate folders, run scripts, install packages, and manage version control. This command-line approach is faster and more flexible than graphical interfaces for many development tasks.

By the end of this tutorial, you’ll understand that:

- Terminal commands like cd, ls, and mkdir let you navigate and organize your file system efficiently

- Virtual environments isolate project dependencies, keeping your Python installations clean and manageable

- pip installs, updates, and removes Python packages directly from the command line

- Git commands track changes to your code and create snapshots called commits

- The command prompt displays your current directory and indicates when the terminal is ready for input

This tutorial walks through the fundamentals of terminal usage on Windows, Linux, and macOS. The examples cover file system navigation, creating files and folders, managing packages with pip, and tracking code changes with Git.
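As a rough illustration of the navigation commands mentioned above, the sketch below mirrors mkdir, cd, and ls with Python's standard library. It isn't taken from the tutorial: the function name `demo_navigation` and the names `projects`, `notes.txt`, and `src` are made up for this example, and everything happens inside a temporary directory so nothing on your system is touched.

```python
import os
import tempfile
from pathlib import Path


def demo_navigation() -> list[str]:
    """Mirror `mkdir`, `cd`, and `ls` inside a throwaway directory."""
    start = Path.cwd()  # remember where we started, like noting `pwd`
    with tempfile.TemporaryDirectory() as tmp:
        project = Path(tmp) / "projects"  # hypothetical folder name
        project.mkdir()                   # roughly `mkdir projects`
        os.chdir(project)                 # roughly `cd projects`
        try:
            Path("notes.txt").touch()     # create an empty file
            Path("src").mkdir()           # and a subfolder
            # roughly `ls`: sorted names of the entries in the current directory
            return sorted(entry.name for entry in Path.cwd().iterdir())
        finally:
            os.chdir(start)               # step back out before cleanup


print(demo_navigation())  # ['notes.txt', 'src']
```

Running the same steps by hand in a terminal is usually quicker, but seeing them side by side can help if you already know Python better than the shell.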


Install and Open the Terminal

Back in the day, the term terminal referred to some clunky hardware that you used to enter data into a computer. Nowadays, people are usually talking about a terminal emulator when they say terminal, and they mean some kind of terminal software that you can find on most modern computers.

Note: There are two other terms that you might hear now and then in combination with the terminal:

- A shell is the program that you interact with when running commands in a terminal.

- A command-line interface (CLI) is a program designed to run in a shell inside the terminal.

In other words, the shell provides the commands that you use in a command-line interface, and the terminal is the application that you run to access the shell.
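To make the distinction between terminal and shell a little more concrete, here is a small sketch that guesses which shell a session uses by reading environment variables. It assumes the common conventions that Unix-like systems set `SHELL` and Windows sets `COMSPEC`. The helper name `guess_shell` is made up for this example, and the result is only a hint, since these variables describe the default shell rather than necessarily the one you're typing into.

```python
import os
from pathlib import PurePosixPath, PureWindowsPath


def guess_shell(environ=None) -> str:
    """Return a best-guess shell name from environment variables."""
    env = os.environ if environ is None else environ

    shell = env.get("SHELL")  # conventionally set on Linux and macOS
    if shell:
        return PurePosixPath(shell).name  # "/bin/zsh" -> "zsh"

    comspec = env.get("COMSPEC")  # conventionally set on Windows
    if comspec:
        return PureWindowsPath(comspec).name  # e.g. "cmd.exe"

    return "unknown"  # neither variable is present


print(guess_shell())
```

Whatever name it prints, the terminal window itself is a separate application: the same shell can run inside different terminal emulators.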

If you’re using a Linux or macOS machine, then the terminal is already built in. You can start using it right away.

On Windows, you also have access to command-line applications like the Command Prompt. However, for this tutorial and terminal work in general, you should use the Windows terminal application instead.

Read on to learn how to install and open the terminal on Windows and how to find the terminal on Linux and macOS.

Windows

The Windows terminal is a modern and feature-rich application that gives you access to the command line, multiple shells, and advanced customization options. If you have Windows 11 or above, chances are that the Windows terminal is already present on your machine. Otherwise, you can download the application from the Microsoft Store or from the official GitHub repository.

Before continuing with this tutorial, you need to get the terminal working on your Windows computer. You can follow the Your Python Coding Environment on Windows: Setup Guide to learn how to install the Windows terminal.

After you install the Windows terminal, you can find it in the Start menu under Terminal. When you start the application, you should see a window that looks like this:

It can be handy to create a desktop shortcut for the terminal or pin the application to your task bar for easier access.

Linux

You can find the terminal application in the application menu of your Linux distribution. Alternatively, you can press Ctrl+Alt+T on your keyboard or use the application launcher and search for the word Terminal.

After opening the terminal, you should see a window similar to the screenshot below:

How you open the terminal may also depend on which Linux distribution you’re using. Each one has a different way of doing it. If you have trouble opening the terminal on Linux, then the Real Python community will help you out in the comments below.

macOS

A common way to open the terminal application on macOS is by opening the Spotlight Search and searching for Terminal. You can also find the terminal app in the application folder inside Finder.

When you open the terminal, you see a window that looks similar to the image below:

Read the full article at https://realpython.com/terminal-commands/ »


February 02, 2026 02:00 PM UTC

February 01, 2026

Graham Dumpleton

Developer Advocacy in 2026

I got into developer advocacy in 2010 at New Relic, followed by a stint at Red Hat. When I moved to VMware, I expected things to continue much as before, but COVID disrupted those plans. When Broadcom acquired VMware, the writing was on the wall, and though it took a while, I was eventually made redundant. That was almost 18 months ago. In the time since, I've taken an extended break with overseas travel and thoughts of early retirement. It has therefore been a while since I've done any direct developer advocacy.

One thing became clear during that time. I had no interest in returning to a 9-to-5 programming job in an office, working on some dull internal system. Ideally, I'd have found a company genuinely committed to open source where I could contribute to open source projects. But those opportunities are thin on the ground, and being based in Australia made it worse as such companies are typically in the US or Europe and rarely hire outside their own region.

Recently I've been thinking about getting back into developer advocacy. The job market makes this a difficult proposition though. Companies based in the US and Europe that might otherwise be good places to work tend to ignore the APAC region, and even when they do pay attention, they rarely maintain a local presence. They just send people out when they need to.

Despite the difficulties, I would also need to understand what I was getting myself into. How much had developer advocacy changed since I was doing it? What challenges would I face working in that space?

So I did what any sensible person does in 2026. I asked an AI to help me research the current state of the field. I started with broad questions across different topics, but one question stood out as an interesting starting point: What are the major forces that have reshaped developer advocacy in recent years?

This post looks at what the AI said and how it matched my own impressions.

Catching Up: What's Changed?

The AI came back with three main themes.

Force 1: AI Has Changed Everything

What the AI told me:

The data suggests a fundamental shift in how developers work. Around 84% of developers now use AI tools on a daily basis, with more than half relying on them for core development tasks. Developers are reporting 30-60% time savings on things like boilerplate generation, debugging, documentation lookup, and testing.

This has significant implications for developer advocacy. The traditional path—developer has a problem, searches Google, lands on Stack Overflow or your documentation, reads a tutorial—has been disrupted. Now, developers increasingly turn to AI assistants first. They describe their problem and get an immediate, contextual answer, often with working code included.