Planet Python

Last update: March 04, 2026 07:43 AM UTC

March 04, 2026

Glyph Lefkowitz

What Is Code Review For?

Humans Are Bad At Perceiving

Humans are not particularly good at catching bugs. For one thing, we get tired easily. There is some science on this, indicating that humans can’t even maintain enough concentration to review more than about 400 lines of code at a time.

We have existing terms of art, in various fields, for the ways in which the human perceptual system fails to register stimuli. Perception fails when humans are distracted, tired, overloaded, or merely improperly engaged.

Each of these has implications for the fundamental limitations of code review as an engineering practice:

- Inattentional Blindness: you won’t be able to reliably find bugs that you’re not looking for.

- Repetition Blindness: you won’t be able to reliably find bugs that you are looking for, if they keep occurring.

- Vigilance Fatigue: you won’t be able to reliably find either kind of bugs, if you have to keep being alert to the presence of bugs all the time.

- and, of course, the distinct but related Alert Fatigue: you won’t even be able to reliably evaluate reports of possible bugs, if there are too many false positives.

Never Send A Human To Do A Machine’s Job

When you need to catch a category of error in your code reliably, you will need a deterministic tool to evaluate — and, thanks to our old friend “alert fatigue” above — ideally, to also remedy that type of error. These tools will relieve the need for a human to make the same repetitive checks over and over. None of them are perfect, but:

- to catch logical errors, use automated tests.

- to catch formatting errors, use autoformatters.

- to catch common mistakes, use linters.

- to catch common security problems, use a security scanner.

Don’t blame reviewers for missing these things.

Code review should not be how you catch bugs.

What Is Code Review For, Then?

Code review is for three things.

First, code review is for catching process failures. If a reviewer has noticed a few bugs of the same type in code review, that’s a sign that that type of bug is probably getting through review more often than it’s getting caught. Which means it’s time to figure out a way to deploy a tool or a test into CI that will reliably prevent that class of error, without requiring reviewers to be vigilant to it any more.

Second — and this is actually its more important purpose — code review is a tool for acculturation. Even if you already have good tools, good processes, and good documentation, new members of the team won’t necessarily know about those things. Code review is an opportunity for older members of the team to introduce newer ones to existing tools, patterns, or areas of responsibility. If you’re building an observer pattern, you might not realize that the codebase you’re working in already has an existing idiom for doing that, so you wouldn’t even think to search for it, but someone else who has worked more with the code might know about it and help you avoid repetition.

You will notice that I carefully avoided saying “junior” or “senior” in that paragraph. Sometimes the newer team member is actually more senior. But also, the acculturation goes both ways. This is the third thing that code review is for: disrupting your team’s culture and avoiding stagnation. If you have new talent, a fresh perspective can also be an extremely valuable tool for building a healthy culture. If you’re new to a team and trying to build something with an observer pattern, and this codebase has no tools for that, but your last job did, and it used one from an open source library, that is a good thing to point out in a review as well. It’s an opportunity to spot areas for improvement to culture, as much as it is to spot areas for improvement to process.

Thus, code review should be as hierarchically flat as possible. If the goal of code review were to spot bugs, it would make sense to reserve the ability to review code to only the most senior, detail-oriented, rigorous engineers in the organization. But most teams already know that that’s a recipe for brittleness, stagnation and bottlenecks. Thus, even though we know that not everyone on the team will be equally good at spotting bugs, it is very common in most teams to allow anyone past some fairly low minimum seniority bar to do reviews, often as low as “everyone on the team who has finished onboarding”.

Oops, Surprise, This Post Is Actually About LLMs Again

Sigh. I’m as disappointed as you are, but there are no two ways about it: LLM code generators are everywhere now, and we need to talk about how to deal with them. An important corollary of understanding code review as a social activity is that LLMs are not social actors, so you cannot rely on code review to inspect their output.

My own personal preference would be to eschew their use entirely, but in the spirit of harm reduction, if you’re going to use LLMs to generate code, you need to remember the ways in which LLMs are not like human beings.

When you relate to a human colleague, you will expect that:

- you can decide which problems to focus on based on their level of experience and areas of expertise; from a late-career colleague you might be looking for bad habits held over from legacy programming languages; from an earlier-career colleague you might be focused more on logical test-coverage gaps,

- and, they will learn from repeated interactions so that you can gradually focus less on a specific type of problem once you have seen that they’ve learned how to address it.

With an LLM, by contrast, while errors can certainly be biased a bit by the prompt from the engineer and pre-prompts that might exist in the repository, the types of errors that the LLM will make are somewhat more uniformly distributed across the experience range.

You will still find supposedly extremely sophisticated LLMs making extremely common mistakes, specifically because they are common, and thus appear frequently in the training data.

The LLM also can’t really learn. An intuitive response to this problem is to simply continue adding more and more instructions to its pre-prompt, treating that text file as its “memory”, but that just doesn’t work, and probably never will. The problem, “context rot”, is somewhat fundamental to the nature of the technology.

Thus, code-generators must be treated more adversarially than you would treat a human code review partner. When you notice it making errors, you always have to add tests to a mechanical, deterministic harness that will evaluate the code, because the LLM cannot meaningfully learn from its mistakes outside a very small context window in the way that a human would, so giving it feedback is unhelpful. Asking it to just generate the code again still requires you to review it all again, and as we have previously learned, you, a human, cannot review more than 400 lines at once.

To Sum Up

Code review is a social process, and you should treat it as such. When you’re reviewing code from humans, share knowledge and encouragement as much as you share bugs or unmet technical requirements.

If you must review code from an LLM, strengthen your automated code-quality verification tooling and make sure that its agentic loop will fail on its own, immediately, the next time those quality checks fail. Do not fall into the trap of appealing to its feelings, knowledge, or experience, because it doesn’t have any of those things.

But for both humans and LLMs, do not fall into the trap of thinking that your code review process is catching your bugs. That’s not its job.

Acknowledgments

Thank you to my patrons who are supporting my writing on this blog. If you like what you’ve read here and you’d like to read more of it, or you’d like to support my various open-source endeavors, you can support my work as a sponsor!

March 03, 2026

PyCoder’s Weekly

Issue #724: Unit Testing Performance, Ordering, FastAPI, and More (March 3, 2026)

#724 – MARCH 3, 2026

View in Browser »

Unit Testing: Catching Speed Changes

This second post in a series covers how to use unit testing to ensure the performance of your code. This post talks about catching differences in performance after code has changed.

ITAMAR TURNER-TRAURING

Lexicographical Ordering in Python

Python lexicographically orders tuples, strings, and all other sequences, comparing element-by-element. Learn what this means when you compare values or sort.

TREY HUNNER

A Cheaper Heroku? See for Yourself

Is PaaS too expensive for your Django app? We built a comparison calculator that puts the fully-managed hosting options head-to-head →

JUDOSCALE sponsor

Start Building With FastAPI

Learn how to build APIs with FastAPI in Python, including Pydantic models, HTTP methods, CRUD operations, and interactive documentation.

REAL PYTHON course

Python Jobs

Python + AI Content Specialist (Anywhere)

Articles & Tutorials

Serving Private Files With Django and S3

Django’s FileField and ImageField are good at storing files, but on their own they don’t let you control access. Serving files from S3 just makes this more complicated. Learn how to secure a file behind your login wall.

RICHARD TERRY

FastAPI Error Handling: Types, Methods, and Best Practices

FastAPI provides various error-handling mechanisms to help you build robust applications. With built-in validation models, exceptions, and custom exception handlers, you can build robust and scalable FastAPI applications.

HONEYBADGER.IO • Shared by Addison Curtis

CLI Subcommands With Lazy Imports

Python 3.15 will support lazy imports, meaning modules don’t get pulled in until they are needed. This can be particularly useful with Command Line Interfaces where a subcommand doesn’t need everything to be useful.

BRETT CANNON

How the Self-Driving Tech Stack Works

A technical guide to how self-driving cars actually work. CAN bus protocols, neural networks, sensor fusion, and control system with open source implementations, most of which can be accessed through Python.

CARDOG

Managing Shared Data Science Code With Git Submodules

Learn how to manage shared code across projects using Git submodules. Prevent version drift, maintain reproducible workflows, and support team collaboration with practical examples.

CODECUT.AI • Shared by Khuyen Tran

Datastar: Modern Web Dev, Simplified

Talk Python interviews Delaney Gillilan, Ben Croker, and Chris May about the Datastar framework, a library that combines the concepts of HTMX, Alpine, and more.

TALK PYTHON podcast

Introducing the Zen of DevOps

Inspired by the Zen of Python, Tibo has written a Zen of DevOps, applying similar ideas from your favorite language to the world of servers and deployment.

TIBO BEIJEN

Stop Using Pickle Already. Seriously, Stop It!

Python’s Pickle is insecure by design, so using it in public facing code is highly problematic. This article explains why and suggests alternatives.

MICHAL NAZAREWICZ

Raw+DC: The ORM Pattern of 2026?

After 25+ years championing ORMs, Michael has switched to raw database queries paired with Python dataclasses. This post explains why.

MICHAEL KENNEDY

Projects & Code

Events

Weekly Real Python Office Hours Q&A (Virtual)

March 4, 2026

REALPYTHON.COM

Python Unplugged on PyTV

March 4 to March 5, 2026

JETBRAINS.COM

Canberra Python Meetup

March 5, 2026

MEETUP.COM

Sydney Python User Group (SyPy)

March 5, 2026

SYPY.ORG

PyDelhi User Group Meetup

March 7, 2026

MEETUP.COM

PyConf Hyderabad 2026

March 14 to March 16, 2026

PYCONFHYD.ORG

Happy Pythoning!

This was PyCoder’s Weekly Issue #724.

[ Subscribe to 🐍 PyCoder’s Weekly 💌 – Get the best Python news, articles, and tutorials delivered to your inbox once a week >> Click here to learn more ]

Rodrigo Girão Serrão

TIL #140 – Install Jupyter with uv

Today I learned how to install jupyter properly while using uv to manage tools.

Running a Jupyter notebook server or Jupyter lab

To run a Jupyter notebook server with uv, you can run the command

$ uvx jupyter notebook

Similarly, if you want to run Jupyter lab, you can run

$ uvx jupyter lab

Both work, but uv will kindly present a message explaining how it's actually doing you a favour, because it guessed what you wanted.

That's because uvx something usually looks for a package named “something” with a command called “something”.

As it turns out, the command jupyter comes from the package jupyter-core, not from the package jupyter.

Installing Jupyter

If you're running Jupyter notebooks often, you can install the notebook server and Jupyter lab with

$ uv tool install --with jupyter jupyter-core

Why uv tool install jupyter fails

Running uv tool install jupyter fails because the package jupyter doesn't provide any commands by itself.

Why uv tool install jupyter-core doesn't work

The command uv tool install jupyter-core looks like it works because it installs the command jupyter correctly.

However, if you use --help you can see that you don't have access to the subcommands you need:

$ uv tool install jupyter-core

...

Installed 3 executables: jupyter, jupyter-migrate, jupyter-troubleshoot

$ jupyter --help

...

Available subcommands: book migrate troubleshoot

That's because the subcommands notebook and lab are from the package jupyter.

The solution?

Install jupyter-core with the additional dependency jupyter, which is what the command uv tool install --with jupyter jupyter-core does.

Other usages of Jupyter

The uv documentation has a page dedicated exclusively to the usage of uv with Jupyter, so check it out for other use cases of the uv and Jupyter combo!

Django Weblog

Django security releases issued: 6.0.3, 5.2.12, and 4.2.29

In accordance with our security release policy, the Django team is issuing releases for Django 6.0.3, Django 5.2.12, and Django 4.2.29. These releases address the security issues detailed below. We encourage all users of Django to upgrade as soon as possible.

CVE-2026-25673: Potential denial-of-service vulnerability in URLField via Unicode normalization on Windows

The django.forms.URLField form field's to_python() method used urllib.parse.urlsplit() to determine whether to prepend a URL scheme to the submitted value. On Windows, urlsplit() performs NFKC normalization (unicodedata.normalize), which can be disproportionately slow for large inputs containing certain characters.

URLField.to_python() now uses a simplified scheme detection, avoiding Unicode normalization entirely and deferring URL validation to the appropriate layers. As a result, while leading and trailing whitespace is still stripped by default, characters such as newlines, tabs, and other control characters within the value are no longer handled by URLField.to_python(). When using the default URLValidator, these values will continue to raise ValidationError during validation, but if you rely on custom validators, ensure they do not depend on the previous behavior of URLField.to_python().

This issue has severity "moderate" according to the Django Security Policy.

Thanks to Seokchan Yoon for the report.

CVE-2026-25674: Potential incorrect permissions on newly created file system objects

Django's file-system storage and file-based cache backends used the process umask to control permissions when creating directories. In multi-threaded environments, one thread's temporary umask change can affect other threads' file and directory creation, resulting in file system objects being created with unintended permissions.

Django now applies the requested permissions via os.chmod() after os.mkdir(), removing the dependency on the process-wide umask.
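The mkdir-then-chmod idea can be illustrated with the standard library alone. This is a minimal sketch and not Django's actual patch: the helper name `make_dir_with_mode` and the directory name `uploads` are hypothetical, and it assumes a POSIX system where permission bits are meaningful.

```python
import os
import stat
import tempfile


def make_dir_with_mode(path, mode):
    """Create `path`, then set its permission bits explicitly (hypothetical helper)."""
    os.mkdir(path)
    # The mode passed to mkdir is masked by the process umask; chmod is not.
    os.chmod(path, mode)


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "uploads")
    # Simulate another thread having set a restrictive process-wide umask.
    old_umask = os.umask(0o077)
    try:
        make_dir_with_mode(target, 0o755)
    finally:
        os.umask(old_umask)
    final_mode = stat.S_IMODE(os.stat(target).st_mode)

print(oct(final_mode))
```

Because the final permissions come from the explicit `os.chmod()` call, the directory ends up with the requested mode even while the umask is temporarily restrictive.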

This issue has severity "low" according to the Django Security Policy.

Thanks to Tarek Nakkouch for the report.

Affected supported versions

- Django main

- Django 6.0

- Django 5.2

- Django 4.2

Resolution

Patches to resolve the issue have been applied to Django's main, 6.0, 5.2, and 4.2 branches. The patches may be obtained from the following changesets.

CVE-2026-25673: Potential denial-of-service vulnerability in URLField via Unicode normalization on Windows

- On the main branch

- On the 6.0 branch

- On the 5.2 branch

- On the 4.2 branch

CVE-2026-25674: Potential incorrect permissions on newly created file system objects

- On the main branch

- On the 6.0 branch

- On the 5.2 branch

- On the 4.2 branch

The following releases have been issued

- Django 6.0.3 (download Django 6.0.3 | 6.0.3 checksums)

- Django 5.2.12 (download Django 5.2.12 | 5.2.12 checksums)

- Django 4.2.29 (download Django 4.2.29 | 4.2.29 checksums)

The PGP key ID used for this release is Natalia Bidart: 2EE82A8D9470983E

General notes regarding security reporting

As always, we ask that potential security issues be reported via private email to security@djangoproject.com, and not via Django's Trac instance, nor via the Django Forum. Please see our security policies for further information.

Real Python

What Does Python's __init__.py Do?

Python’s special __init__.py file marks a directory as a regular Python package and allows you to import its modules. This file runs automatically the first time you import its containing package. You can use it to initialize package-level variables, define functions or classes, and structure the package’s namespace clearly for users.

By the end of this video course, you’ll understand that:

- A directory without an __init__.py file becomes a namespace package, which behaves differently from a regular package and may cause slower imports.

- You can use __init__.py to explicitly define a package’s public API by importing specific modules or functions into the package namespace.

- The Python convention of using leading underscores helps indicate to users which objects are intended as non-public, although this convention can still be bypassed.

- Code inside __init__.py runs only once during the first import, even if you run the import statement multiple times.

Understanding how to effectively use __init__.py helps you structure your Python packages in a clear, maintainable way, improving usability and namespace management.
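A small experiment can make the run-once behavior and the public-API idea concrete. The sketch below builds a throwaway package in a temporary directory; the names `demo_pkg`, `_core`, and `greet` are invented for illustration and do not come from the course.

```python
import importlib
import pathlib
import sys
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    pkg_dir = pathlib.Path(tmp) / "demo_pkg"  # hypothetical package name
    pkg_dir.mkdir()
    # Non-public module: the leading underscore is only a convention.
    (pkg_dir / "_core.py").write_text(
        "def greet(name):\n    return f'Hello, {name}!'\n"
    )
    # __init__.py re-exports greet as the package's public API.
    (pkg_dir / "__init__.py").write_text(
        "print('running demo_pkg/__init__.py')\n"
        "from ._core import greet\n"
        "__all__ = ['greet']\n"
    )

    sys.path.insert(0, tmp)
    try:
        first = importlib.import_module("demo_pkg")   # executes __init__.py
        second = importlib.import_module("demo_pkg")  # served from sys.modules
    finally:
        sys.path.remove(tmp)

    same_module = first is second
    message = first.greet("world")

print(same_module, message)
```

The message from `__init__.py` is printed a single time, because the second import finds the package already cached in `sys.modules` and returns the same module object.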

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]

Quiz: Duck Typing in Python: Writing Flexible and Decoupled Code

In this quiz, you’ll test your understanding of Duck Typing in Python: Writing Flexible and Decoupled Code.

By working through this quiz, you’ll revisit what duck typing is and its pros and cons, how Python uses behavior-based interfaces, how protocols and special methods support it, and what alternatives you can use in Python.

PyBites

Why Building a Production RAG Pipeline is Easier Than You Think

Adding AI to legacy code doesn’t have to be a challenge.

Many devs are hearing this right now: “We need to add AI to the app.”

And for many of them, panic ensues.

The assumption is that you have to rip your existing architecture down to its foundation. You start having nightmares about standing up complex microservices, massive AWS bills, and spending six months learning the intricate math behind vector embeddings.

It feels like a monumental risk to your stable, production-ready codebase, right?

Here’s the current reality though: adding AI to an existing application doesn’t actually require a massive rewrite.

If you have solid software design fundamentals, integrating a Retrieval-Augmented Generation (RAG) pipeline is entirely within your reach.

Here’s how you do it without breaking everything you’ve already built.

Get the Python Stack to do the Heavy Lifting

You don’t need to build your AI pipeline from scratch. The Python ecosystem has matured to the point where the hardest parts of a RAG pipeline are already solved for you.

- Need to parse massive PDFs? Libraries like docling handle it practically out of the box.

- Need to convert text into embeddings and store them? You let the LLM provider handle the embedding math, and you drop the results into a vector database.

It’s not a huge technical challenge in Python. It is just an orchestration of existing, powerful tools.

Stop Coding and Start Orchestrating

When a developer builds a RAG pipeline and the AI starts hallucinating, their first instinct is to dive into the code. They try to fix the API calls or mess with the vector search logic.

Take a step back. The code usually isn’t the problem.

The system prompt is the conductor of your entire RAG pipeline. It dictates how the LLM interacts with your vector database. If you’re getting bad results, you don’t need to rewrite your Python logic; you need to refine your prompt, through trial and error, until it imposes strict, data-grounded constraints.

Beat Infrastructure Constraints by Offloading

What if your app is hosted on something lightweight, like Heroku, with strict size and memory limits? You might think you need to containerise everything and migrate to a heavier cloud setup.

Nope! You just need to separate your concerns.

Indexing documents and generating embeddings is heavy work. Querying is light. Offload the heavy lifting (like storing and searching vectors) entirely to your vector database service (like Weaviate). This keeps your core app lightweight, so it only acts as the middleman routing the query.

We Broke Down Exactly How This Works With Tim Gallati

We explored the reality of this architecture with Tim Gallati and Pybites AI coach Juanjo on the podcast. Tim had an existing app, Quiet Links, running on Heroku.

In just six weeks with us, he integrated a massive, production-ready RAG pipeline into it, without breaking his existing user experience.

If you want to hear the full breakdown of how they architected this integration, listen to the episode using the player above, or at the following links:

March 02, 2026

Python Morsels

When are classes used in Python?

While you don't often need to make your own classes in Python, they can sometimes make your code reusable and easier to read.

Using classes because a framework requires it

Unlike many programming languages, you can accomplish quite a bit in Python without ever making a class.

So when are classes typically used?

The most common time to use a class in Python is when using a library or framework that requires writing a class.

For example, users of the Django web framework need to make a class to allow Django to manage their database tables:

from django.db import models

class Product(models.Model):
    name = models.CharField(max_length=300)
    price = models.DecimalField(max_digits=10, decimal_places=2)
    description = models.TextField()

    def __str__(self):
        return self.name

With Django, each Model class is used for managing one table in the database.

Each instance of every model represents one row in each database table:

>>> from products.models import Product

>>> Product.objects.get(id=1)

<Product: rubber duck>

Many Python programmers create their first class because a library requires them to make one.

Passing the same data into multiple functions

But sometimes you may choose …

Read the full article: https://www.pythonmorsels.com/when-are-classes-used/

Brett Cannon

State of WASI support for CPython: March 2026

It's been a while since I posted about WASI support in CPython! 😅 Up until now, most of the work I have been doing around WASI has been making its maintenance easier for me and other core developers. For instance, the cpython-devcontainer repo now provides a WASI dev container so people don't have to install the WASI SDK to be productive (e.g. there's a WASI codespace now so you can work on WASI entirely from your browser without installing anything). All this work around making development easier not only led to having WASI instructions in the devguide and the creation of a CLI app, but also a large expansion of how to use containers to do CPython development.

But the main reason I'm blogging today is that PEP 816 was accepted! That PEP defines how WASI compatibility will be handled starting with Python 3.15. The key point is that once the first beta for a Python version is reached, the WASI and WASI SDK versions that will be supported for the life of that Python version will be locked down. That gives the community a target to build packages for since things built with the WASI SDK are not forwards- or backwards-compatible for linking purposes due to wasi-libc not having any compatibility guarantees.

With that PEP out of the way, the next big items on my WASI todo list are (in rough order):

- Implement a subcommand to bundle up a WASI build for distribution

- Write a PEP to define a platform tag for wheels

- Implement a subcommand to build dependencies for CPython (e.g. zlib)

- Turn on socket support (which requires WASI 0.3 and threading support to be released as I'm skipping over WASI 0.2)

Rodrigo Girão Serrão

TIL #139 – Multiline input in the REPL

Today I learned how to do multiline input in the REPL using an uncommon combination of arguments for the built-in open.

A while ago I learned I could use open(0) to open standard input.

This unlocks a neat trick that allows you to do multiline input in the REPL:

>>> msg = open(0).read()

Hello,

world!

^D

>>> msg

'Hello,\nworld!\n'

The cryptic ^D is Ctrl+D, which means EOF on Unix systems.

If you're on Windows, use Ctrl+Z.

The problem is that if you try to use open(0).read() again to read more multiline input, you get an exception:

OSError: [Errno 9] Bad file descriptor

That's because, when you finished reading the first time around, Python closed the file descriptor 0, so you can no longer use it.

The fix is to set closefd=False when you use the built-in open.

With the parameter closefd set to False, the underlying file descriptor isn't closed and you can reuse it:

>>> msg1 = open(0, closefd=False).read()

Hello,

world!

^D

>>> msg1

'Hello,\nworld!\n'

>>> msg2 = open(0, closefd=False).read()

Goodbye,

world!

^D

>>> msg2

'Goodbye,\nworld!\n'

By using open(0, closefd=False), you can read multiline input in the REPL repeatedly.

Real Python

Automate Python Data Analysis With YData Profiling

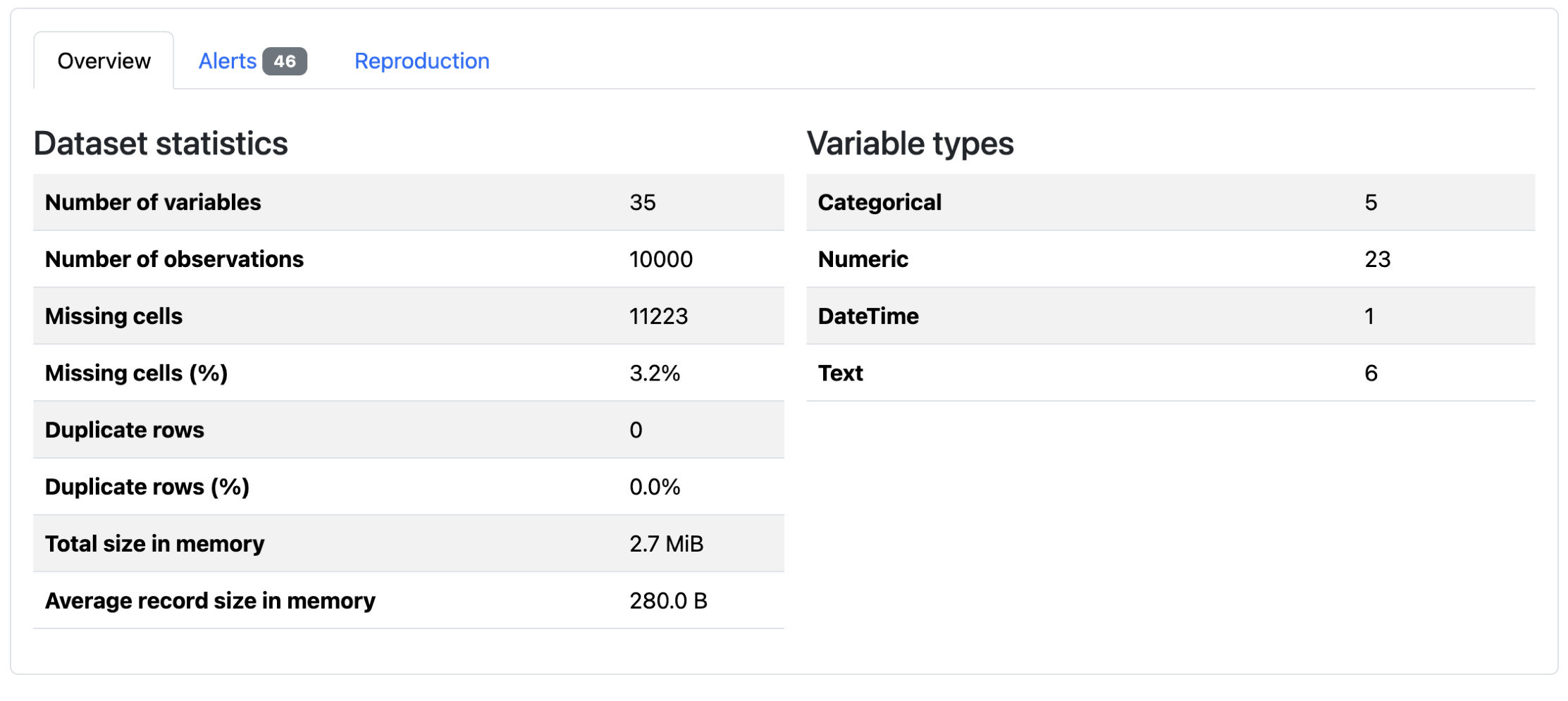

The YData Profiling package generates an exploratory data analysis (EDA) report with a few lines of code. The report provides dataset and column-level analysis, including plots and summary statistics to help you quickly understand your dataset. These reports can be exported to HTML or JSON so you can share them with other stakeholders.

By the end of this tutorial, you’ll understand that:

- YData Profiling generates interactive reports containing EDA results, including summary statistics, visualizations, correlation matrices, and data quality warnings from DataFrames.

- ProfileReport creates a profile you can save with .to_file() for HTML or JSON export, or display inline with .to_notebook_iframe().

- Setting tsmode=True and specifying a date column with sortby enables time series analysis, including stationarity tests and seasonality detection.

- The .compare() method generates side-by-side reports highlighting distribution shifts and statistical differences between datasets.

To get the most out of this tutorial, you’ll benefit from having knowledge of pandas.

Note: The examples in this tutorial were tested using Python 3.13. Additionally, you may need to install setuptools<81 for backward compatibility.

You can install this package using pip:

$ python -m pip install ydata-profiling

Once installed, you’re ready to transform any pandas DataFrame into an interactive report. To follow along, download the example dataset you’ll work with by clicking the link below:

Get Your Code: Click here to download the free sample code and start automating Python data analysis with YData Profiling.

The following example generates a profiling report from the 2024 flight delay dataset and saves it to disk:

flight_report.py

import pandas as pd

from ydata_profiling import ProfileReport

df = pd.read_csv("flight_data_2024_sample.csv")

profile = ProfileReport(df)

profile.to_file("flight_report.html")

This code generates an HTML file containing interactive visualizations, statistical summaries, and data quality warnings:

You can open the file in any browser to explore your data’s characteristics without writing additional analysis code.

There are a number of tools available for high-level dataset exploration, but not all are built for the same purpose. The following table highlights a few common options and when each one is a good fit:

| Use case | Pick | Best for |
|---|---|---|
| You want to quickly generate an exploratory report | ydata-profiling | Generating exploratory data analysis reports with visualizations |
| You want an overview of a large dataset | skimpy or df.describe() | Providing fast, lightweight summaries in the console |
| You want to enforce data quality | pandera | Validating schemas and catching errors in data pipelines |

Overall, YData Profiling is best used as an exploratory report creation tool. If you’re looking to generate an overview for a large dataset, using SkimPy or a built-in DataFrame library method may be more efficient. Other tools, like Pandera, are more appropriate for data validation.

If YData Profiling looks like the right choice for your use case, then keep reading to learn about its most important features.

Building a Report With YData Profiling

A YData Profiling report is composed of several sections that summarize different aspects of your dataset. Before customizing a report, it helps to understand the main components it includes and what each one is designed to show.

Read the full article at https://realpython.com/ydata-profiling-eda/ »

Rodrigo Girão Serrão

Remove extra spaces

Learn how to remove extra spaces from a string using regex, string splitting, a fixed point, and itertools.groupby.

In this article you'll learn about four different ways in which you can remove extra spaces from the middle of a string. That is, you'll learn how to go from a string like

string = "This is  a   perfectly    normal     sentence."

to a string like

string = "This is a perfectly normal sentence."

The best solution to remove extra spaces from a string

The best solution for this task, which is both readable and performant, uses the regex module re:

import re

def remove_extra_spaces(string):
    return re.sub(" {2,}", " ", string)

The function sub can be used to substitute a pattern for a replacement you specify.

The pattern " {2,}" finds runs of 2 or more consecutive spaces and replaces them with a single space.

String splitting

Using the string method split can also be a good approach:

def remove_extra_spaces(string):
    return " ".join(string.split(" "))

If you're using string splitting, you'll want to provide the space " " as an argument.

If you call split with no arguments, you'll be splitting on all whitespace, which is not what you want if you have newlines and other whitespace characters you should preserve.

This solution is great, except it doesn't work:

print(remove_extra_spaces(string))
# 'This is  a   perfectly    normal     sentence.'

The problem is that splitting on the space will produce a list with empty strings:

print(string.split(" "))
# ['This', 'is', '', 'a', '', '', 'perfectly', '', '', '', 'normal', '', '', '', '', 'sentence.']

These empty strings will be joined back together and you'll end up with the same string you started with. For this to work, you'll have to filter the empty strings first:

    def remove_extra_spaces(string):
        return " ".join(filter(None, string.split(" ")))

Using filter(None, ...) filters out the Falsy strings, so that the final joining operation only joins the strings that matter.

This solution has a problem, though, in that it will completely remove any leading or trailing whitespace, which may or may not be a problem.
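To make the difference concrete, the sketch below runs both approaches on a padded string. The names with_regex and with_split are hypothetical labels for the two functions shown earlier, not names used in the article:

```python
import re

def with_regex(string):
    # Regex approach: runs of spaces collapse to one space, wherever they are.
    return re.sub(" {2,}", " ", string)

def with_split(string):
    # Split-and-filter approach: empty pieces are dropped before joining.
    return " ".join(filter(None, string.split(" ")))

padded = "  hello   world  "
print(repr(with_regex(padded)))  # ' hello world '
print(repr(with_split(padded)))  # 'hello world'
```

The regex version keeps one leading and one trailing space, while the split version strips them entirely, so the two are only interchangeable when the input has no padding.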

The two solutions presented so far — using regular expressions and string splitting — are pretty reasonable. But they're also boring. You'll now learn about two other solutions.

Replacing spaces until you hit a fixed point

You can think about the task of removing extra spaces as the task of replacing extra spaces by the empty string.

And if you think about doing string replacements, you should think about the string method replace.

You can't do something like string.replace(" ", ""), otherwise you'd remove all spaces, so you have to be a bit more careful:

    def remove_extra_spaces(string):
        while True:
            new_string = string.replace("  ", " ")
            if new_string == string:
                break
            string = new_string
        return string

You can replace two consecutive spaces by a single space, and you repeat this operation until nothing changes in your string.

The idea of running a function until its output doesn't change is common enough in maths that they call...
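The loop above can also be factored into a generic helper. This is only a sketch: fixed_point is a hypothetical name introduced here for illustration and is not necessarily how the article continues:

```python
def fixed_point(func, value):
    """Apply func repeatedly until the output equals the input."""
    while True:
        new_value = func(value)
        if new_value == value:
            return value
        value = new_value

def remove_extra_spaces(string):
    # Each pass replaces pairs of spaces by one space; every pass that
    # changes the string makes it shorter, so the loop terminates.
    return fixed_point(lambda s: s.replace("  ", " "), string)

print(remove_extra_spaces("This is  a   perfectly    normal     sentence."))
# This is a perfectly normal sentence.
```

A run of five spaces shrinks to three, then two, then one, at which point another pass changes nothing and the helper returns.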

Real Python

Quiz: The pandas DataFrame: Make Working With Data Delightful

In this quiz, you’ll test your understanding of the pandas DataFrame.

By working through this quiz, you’ll review how to create pandas DataFrames, access and modify columns, insert and sort data, extract values as NumPy arrays, and how pandas handles missing data.


Python Bytes

#471 The ORM pattern of 2026?

Topics covered in this episode:

- [Raw+DC: The ORM pattern of 2026](https://mkennedy.codes/posts/raw-dc-the-orm-pattern-of-2026/?featured_on=pythonbytes)?
- [pytest-check releases](https://github.com/okken/pytest-check/releases?featured_on=pythonbytes)
- [Dataclass Wizard](https://dcw.ritviknag.com/en/latest/#)
- [SQLiteo](https://github.com/adamghill/sqliteo?featured_on=pythonbytes) - “native macOS SQLite browser built for normal people”
- Extras
- Joke

[Watch on YouTube](https://www.youtube.com/watch?v=tZyf7KtTQVU)

About the show

Sponsored by us! Support our work through:

- Our [courses at Talk Python Training](https://training.talkpython.fm/?featured_on=pythonbytes)
- [The Complete pytest Course](https://courses.pythontest.com/p/the-complete-pytest-course?featured_on=pythonbytes)
- [Patreon Supporters](https://www.patreon.com/pythonbytes)

Connect with the hosts

- Michael: [@mkennedy@fosstodon.org](https://fosstodon.org/@mkennedy) / [@mkennedy.codes](https://bsky.app/profile/mkennedy.codes?featured_on=pythonbytes) (bsky)
- Brian: [@brianokken@fosstodon.org](https://fosstodon.org/@brianokken) / [@brianokken.bsky.social](https://bsky.app/profile/brianokken.bsky.social?featured_on=pythonbytes)
- Show: [@pythonbytes@fosstodon.org](https://fosstodon.org/@pythonbytes) / [@pythonbytes.fm](https://bsky.app/profile/pythonbytes.fm) (bsky)

Join us on YouTube at [pythonbytes.fm/live](https://pythonbytes.fm/stream/live) to be part of the audience. Usually Monday at 11am PT. Older video versions available there too.

Finally, if you want an artisanal, hand-crafted digest of every week of the show notes in email form? Add your name and email to [our friends of the show list](https://pythonbytes.fm/friends-of-the-show), we'll never share it.

Michael #1: [Raw+DC: The ORM pattern of 2026](https://mkennedy.codes/posts/raw-dc-the-orm-pattern-of-2026/?featured_on=pythonbytes)?

- ORMs/ODMs provide great support and abstractions for developers
- They are not the native language of agentic AI
- Raw queries are trained 100x+ more than standard ORMs
- Using raw queries at the data access optimizes for AI coding
- Returning some sort of object mapped to the data optimizes for type safety and devs

Brian #2: [pytest-check releases](https://github.com/okken/pytest-check/releases?featured_on=pythonbytes)

- 3 merged pull requests
- 8 closed issues
- at one point got to 0 PR’s and 1 enhancement request
- Now back to 2 issues and 1 PR, but activity means it’s still alive and being used. so cool
- Check out [changelog](https://github.com/okken/pytest-check/blob/main/changelog.md?featured_on=pythonbytes) for all mods
- A lot of changes around supporting mypy
  - I’ve decided to NOT have the examples be fully `--strict` as I find it reduces readability
    - See `tox.ini` for explanation
  - But src is `--strict` clean now, so user tests can be `--strict` clean.

Michael #3: [Dataclass Wizard](https://dcw.ritviknag.com/en/latest/#)

- Simple, elegant wizarding tools for Python’s `dataclasses`.
- Features
  - 🚀 Fast: code-generated loaders and dumpers
  - 🪶 Lightweight: pure Python, minimal dependencies
  - 🧠 Typed: powered by Python type hints
  - 🧙 Flexible: JSON, YAML, TOML, and environment variables
  - 🧪 Reliable: battle-tested with extensive test coverage
- [No Inheritance Needed](https://dcw.ritviknag.com/en/latest/#no-inheritance-needed)

Brian #4: [SQLiteo](https://github.com/adamghill/sqliteo?featured_on=pythonbytes) - “native macOS SQLite browser built for normal people”

- Adam Hill
- This is a fun tool, built by someone I trust.
- That trust part is something I’m thinking about a lot in these days of dev+agent built tools
- Some notes on my thoughts when evaluating
  - I know mac rules around installing .dmg files not from the apple store are picky.
    - And I like that
  - But I’m ok with the override when something comes from a dev I trust
  - The contributors are all Adam
    - I’m still not sure how I feel about letting agents do commits in repos
  - There’s “AGENTS” folder and markdown files in the project for agents, so Ad

Extras

Michael:

- [PyTV Python Unplugged This Week](https://lp.jetbrains.com/python-unplugged/?featured_on=pythonbytes)
- [IBM Crashes 11% in 4 Hours](https://www.techbuzz.ai/articles/ibm-crashes-11-as-anthropic-threatens-cobol-empire?featured_on=pythonbytes) - $24 Billion Wiped Out After Anthropic's Claude Code Threatens the Entire COBOL Consulting Industry
- Loving my [40” ultrawide monitor](https://www.amazon.com/dp/B0FJYNVR3R?ref_=ppx_hzsearch_conn_dt_b_fed_asin_title_1&featured_on=pythonbytes) more every day
- [Updatest](https://updatest.app?featured_on=pythonbytes) for updating all the mac things
- [Ice has Thawed out](https://www.reddit.com/r/macapps/comments/1qwkq38/os_thaw_a_fork_of_ice_menu_bar_manager_for_macos/?featured_on=pythonbytes) (mac menubar app)

Joke: [House is read-only](https://x.com/pr0grammerhum0r/status/2018852032304566331?s=12&featured_on=pythonbytes)!

March 01, 2026

Tryton News

Tryton News March 2026

In the last month we focused on fixing bugs, improving existing behaviour, and resolving performance issues, building on the changes from our last release. We also added some new features which we would like to introduce to you in this newsletter.

For an in depth overview of the Tryton issues please take a look at our issue tracker or see the issues and merge requests filtered by label.

Changes for the User

Sales, Purchases and Projects

Now we add the web shop URL to sales.

We now add a menu entry for party identifier.

Accounting, Invoicing and Payments

Now we can search for shipments on the invoice line.

In UBL we now set BillingReference to Invoice and Credit Note.

We now improve the layout of the invoice credit form.

Now we enforce the Peppol rule “[BR-27]-The Item net price (BT-146) shall NOT be negative”. So we make sure that the unit price on an invoice line is not negative.

We now add an Update Status button to the Peppol document.

Stock, Production and Shipments

Now we can charge duties for UPS for buyer or seller on import and export defined in the incoterms.

User Interface

Now we allow tabs to be re-ordered in Sao.

In the favourites menu we now display a message explaining how to use it, instead of showing an empty menu.

We also improve the blank state of notifications by showing a message.

Now we add a button in Sao for closing the search filter.

System Data and Configuration

Now we update the required version of python-stdnum to version 2.22 and introduce new party identifiers.

New Releases

We released bug fixes for the currently maintained long term support series 7.0 and 6.0, and for the penultimate series 7.8 and 7.6.

Security

Please update your systems to take care of a security related bug we found last month.

Changes for the System Administrator

Now we also display the create date and create time in the error list.

We now add basic authentication for user applications, because in some cases the consumer of the user application may not be able to use the bearer authentication.

Writing compatible HTML for email can be very difficult. MJML provides a syntax to ease the creation of such emails. So now we support the MJML email format in Tryton.

Changes for Implementers and Developers

We now add a timestamp field on ModelStorage for last modified.

Now we introduce a new type of field for SQL expressions: Field.sql_column(tables, Model)

We now allow UserError and UserWarning exceptions to be raised on evaluating button inputs.

Now we replace the extension separator by an underscore in report names used as temporary files.

We no longer check for missing parent depends when the One2Many is readonly.

Now we preserve the line numbers when converting doctest files to python files.


February 28, 2026

Talk Python to Me

#538: Python in Digital Humanities

Digital humanities sounds niche, until you realize it can mean a searchable archive of U.S. amendment proposals, Irish folklore, or pigment science in ancient art. Today I’m talking with David Flood from Harvard’s DARTH team about an unglamorous problem: What happens when the grant ends but the website can’t. His answer, static sites, client-side search, and sneaky Python. Let’s dive in.

Episode sponsors

- [Sentry Error Monitoring, Code talkpython26](https://talkpython.fm/sentry)
- [Command Book](https://talkpython.fm/commandbookapp)
- [Talk Python Courses](https://talkpython.fm/training)

Links from the show

Guest

- David Flood: [davidaflood.com](https://www.davidaflood.com?featured_on=talkpython)

- DARTH: [digitalhumanities.fas.harvard.edu](https://digitalhumanities.fas.harvard.edu?featured_on=talkpython)
- Amendments Project: [digitalhumanities.fas.harvard.edu](https://digitalhumanities.fas.harvard.edu/projects/amend/?featured_on=talkpython)
- Fionn Folklore Database: [fionnfolklore.org](https://fionnfolklore.org/en?featured_on=talkpython)
- Mapping Color in History: [iiif.harvard.edu](https://iiif.harvard.edu/projects/mapping-color-in-history/?featured_on=talkpython)
- Apatosaurus: [apatosaurus.io](https://apatosaurus.io/?featured_on=talkpython)
- Criticus: [github.com](https://github.com/d-flood/criticus?featured_on=talkpython)
- github.com/palewire/django-bakery: [github.com](https://github.com/palewire/django-bakery?featured_on=talkpython)
- sigsim.acm.org/conf/pads/2026/blog/artifact-evaluation: [sigsim.acm.org](https://sigsim.acm.org/conf/pads/2026/blog/artifact-evaluation/?featured_on=talkpython)
- Hugo: [gohugo.io](https://gohugo.io?featured_on=talkpython)
- Water Stories: [waterstories.fas.harvard.edu](https://waterstories.fas.harvard.edu/?featured_on=talkpython)
- Tsumeb Mine Notebook: [tmn.fas.harvard.edu](https://tmn.fas.harvard.edu/?featured_on=talkpython)
- Dharma and Punya: [dharmapunya2019.org](https://dharmapunya2019.org/?featured_on=talkpython)
- Pagefind library: [pagefind.app](https://pagefind.app?featured_on=talkpython)
- django_webassembly: [github.com](https://github.com/m-butterfield/django_webassembly?featured_on=talkpython)
- Astro Static Site Generator: [astro.build](https://astro.build?featured_on=talkpython)
- PageFind Python Lib: [pypi.org](https://pypi.org/project/pagefind/?featured_on=talkpython)
- Frozen-Flask: [frozen-flask.readthedocs.io](https://frozen-flask.readthedocs.io/en/latest/?featured_on=talkpython)

- Watch this episode on YouTube: [youtube.com](https://www.youtube.com/watch?v=ZaI2AxRq_OA)
- Episode #538 deep-dive: [talkpython.fm/538](https://talkpython.fm/episodes/show/538/python-in-digital-humanities#takeaways-anchor)
- Episode transcripts: [talkpython.fm](https://talkpython.fm/episodes/transcript/538/python-in-digital-humanities)

Theme Song: Developer Rap

- 🥁 Served in a Flask 🎸: [talkpython.fm/flasksong](https://talkpython.fm/flasksong)

---== Don't be a stranger ==---

- YouTube: [youtube.com/@talkpython](https://talkpython.fm/youtube)

- Bluesky: [@talkpython.fm](https://bsky.app/profile/talkpython.fm)
- Mastodon: [@talkpython@fosstodon.org](https://fosstodon.org/web/@talkpython)
- X.com: [@talkpython](https://x.com/talkpython)

- Michael on Bluesky: [@mkennedy.codes](https://bsky.app/profile/mkennedy.codes?featured_on=talkpython)
- Michael on Mastodon: [@mkennedy@fosstodon.org](https://fosstodon.org/web/@mkennedy)
- Michael on X.com: [@mkennedy](https://x.com/mkennedy?featured_on=talkpython)

February 28, 2026 09:28 PM UTC

Graham Dumpleton

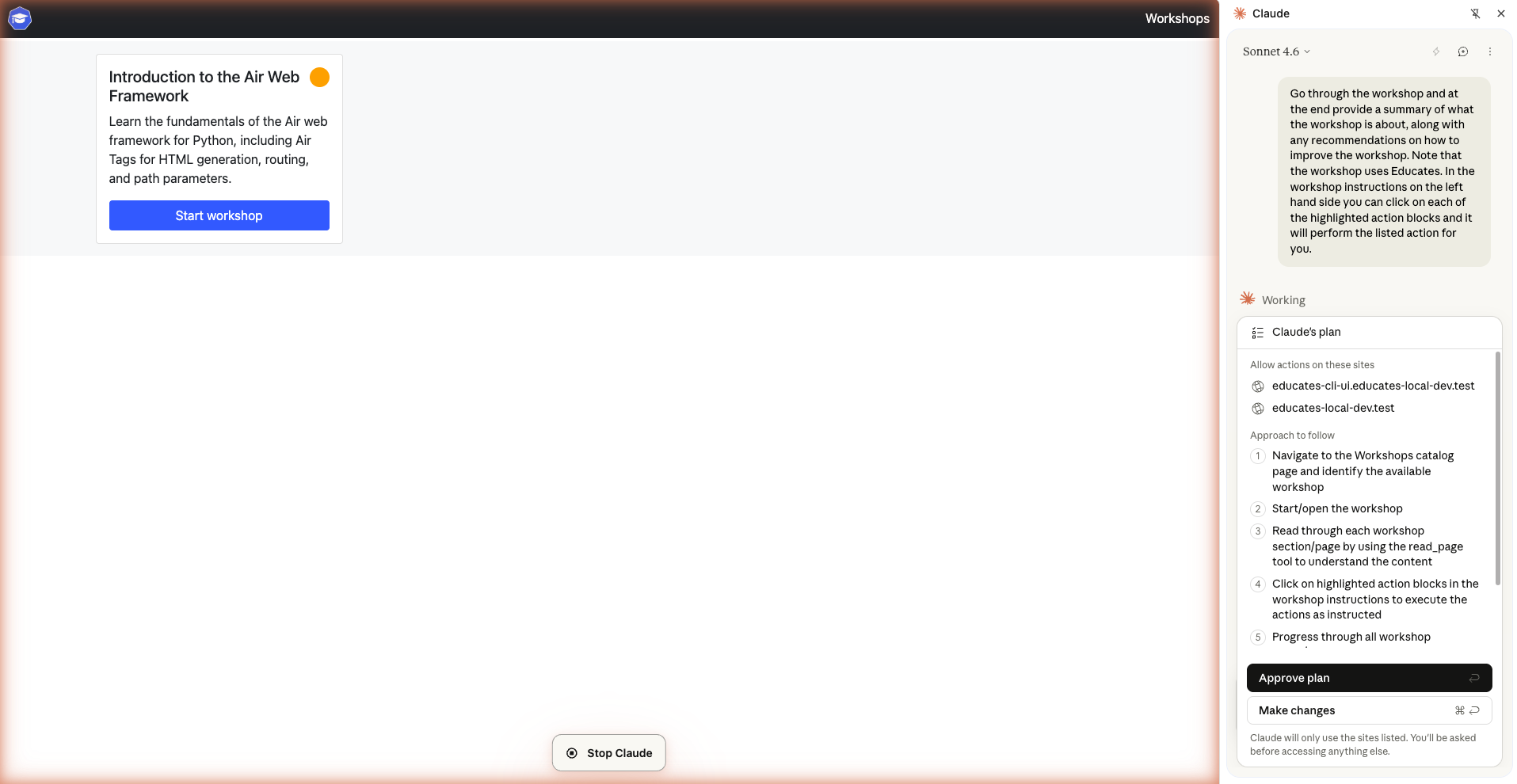

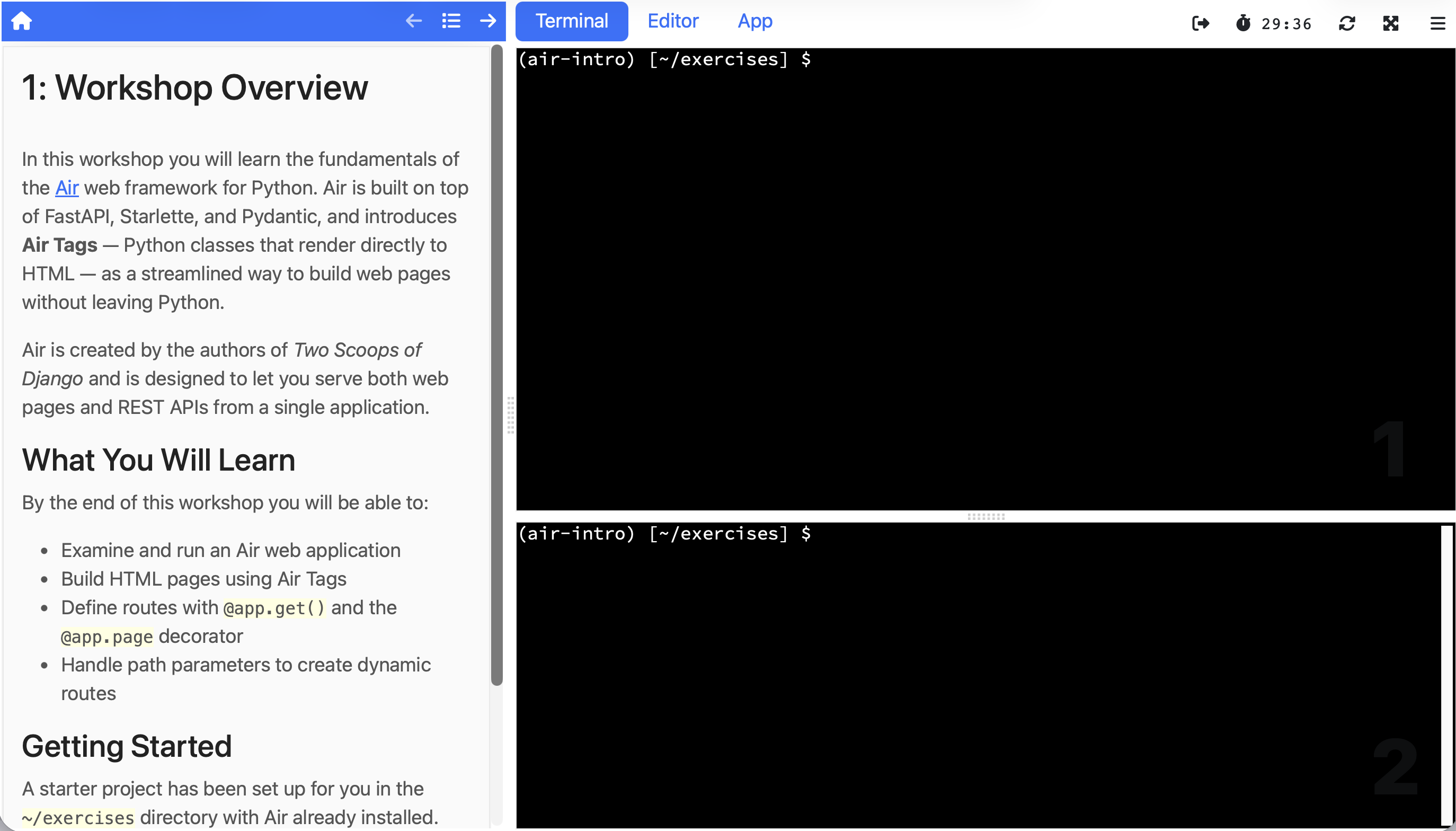

Reviewing workshops with AI

In my previous post I walked through deploying an AI-generated Educates workshop on a local Kubernetes cluster. The workshop was up and running, accessible through the training portal, and ready to be used. But having a workshop that runs is only the first step. The next question is whether it's actually any good.

Workshop review is traditionally a manual process. You open the workshop in a browser, click through each page, read the instructions, run the commands, check that everything works, and make notes on what could be improved. It's time-consuming and somewhat tedious, especially when you're the person who wrote the workshop in the first place and already know what it's supposed to do. Even this task, though, is one where AI can help.

Reviewing the source vs the experience

One option would be to point Claude at the workshop source files directly. Hand it the Markdown content and the YAML configuration and ask it to review the material. This works to a degree, but it only checks the content in isolation. It doesn't tell you anything about how the workshop actually feels when someone uses it.

The real test of a workshop is the experience of navigating it as a learner. How do the instructions read when you're looking at them alongside a terminal and editor? Does the flow between pages make sense? Do the clickable actions appear at the right moments, and is it clear what they do? These are things you can only assess by going through the workshop the way a learner would.

So rather than having Claude review the source files, the better approach is to have it use the workshop in an actual browser.

Claude in the browser

The Claude extension for Chrome can interact with web pages directly. It can read page content, click on elements, scroll, and navigate between pages. That makes it well suited to walking through an Educates workshop end to end.

I pointed it at the Educates training portal with the Air workshop available, and gave it a prompt asking it to go through the workshop and provide a summary along with recommendations for improvement.

The prompt needed to include some explanation of how Educates workshops work, because the browser extension doesn't appear to support Claude skills (as far as I'm aware). That meant I had to tell it that the workshop has clickable action blocks in the instructions panel on the left side, and that it should click on each of those highlighted actions to execute the described tasks, just as a learner would. Without that context, Claude wouldn't know to interact with those elements.

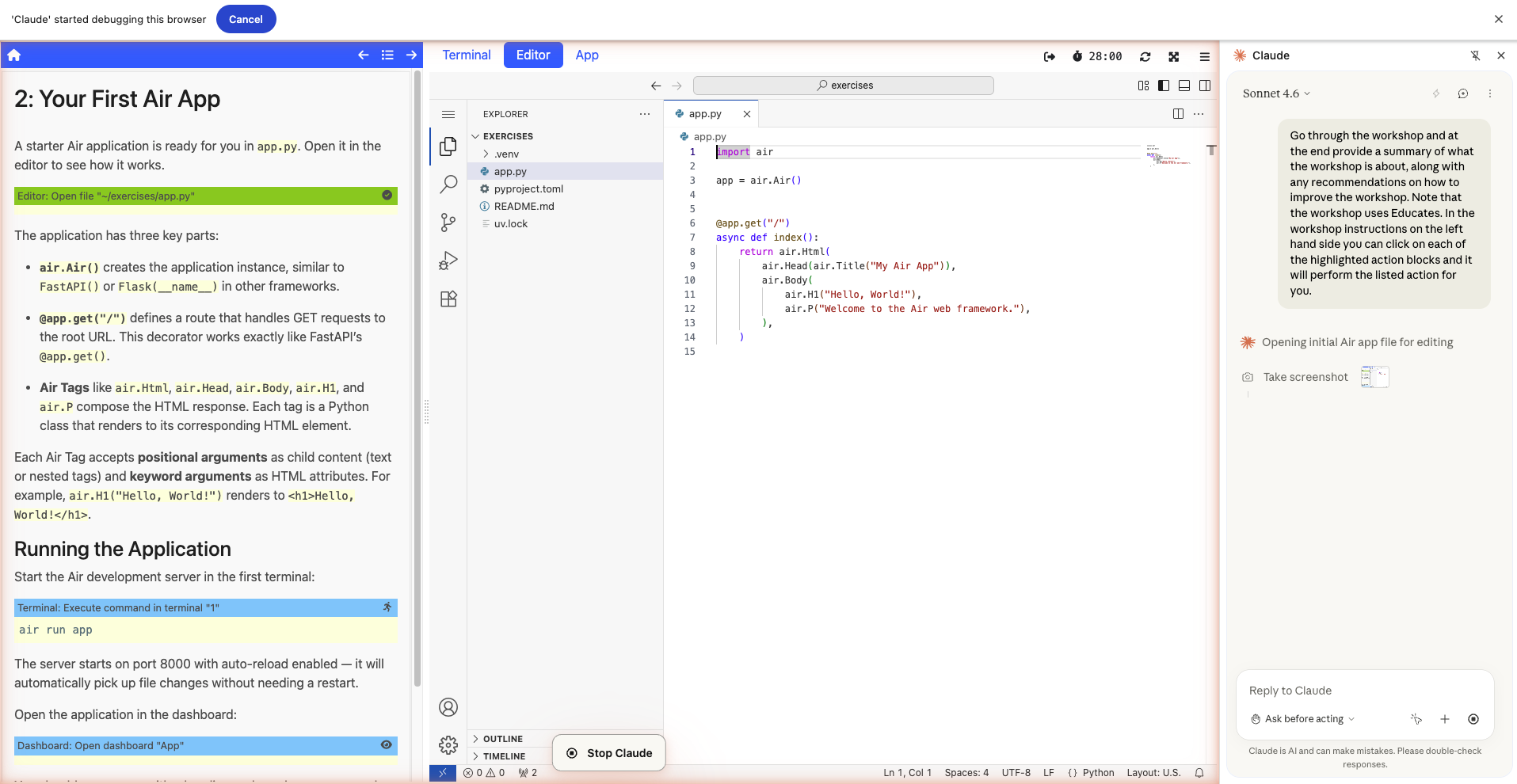

Navigating the workshop

Once Claude had its plan approved, it started working through the workshop. It navigated to the workshop catalog, started the workshop, and began reading through each page of instructions. It scrolled down through longer pages, clicked through to following pages, and because I'd told it about the clickable actions, it clicked on those too, triggering terminal commands and editor actions just as a human learner would.

Claude worked through the entire workshop from start to finish, experiencing it the same way someone encountering it for the first time would. It isn't as quick as if it were reviewing the raw files directly, but it's still faster than a person doing the same thing.

The impartial reviewer

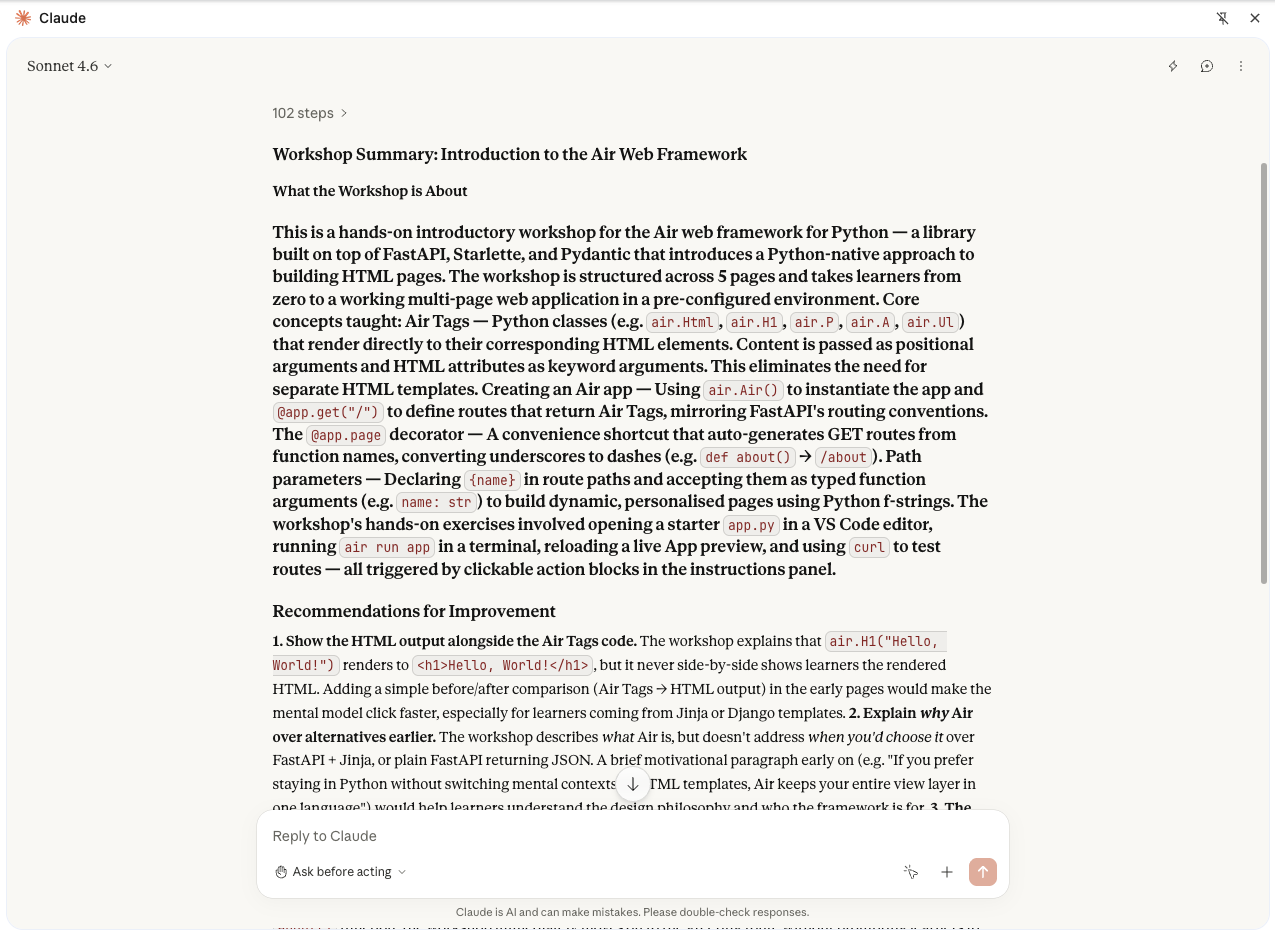

Once Claude had gone through the whole workshop, it provided its review with a summary of what the workshop covers and recommendations for how it could be improved.

What I find most valuable about this is that Claude is impartial in a way that human reviewers often aren't. I have high standards for workshops, being the author of the Educates platform itself, and when I'm reviewing workshops that others have written, if I feel the quality doesn't meet those standards, there's a social pressure not to be too blunt about it. You don't want to discourage people, so you temper your feedback. Most human reviewers do the same, holding back on the more critical observations to be polite.

Claude doesn't have that constraint. It gives you the direct feedback, which in practice means you get more useful observations. The feedback covers multiple dimensions too: the quality of the written content, the overall flow of the workshop, how clickable actions are used and whether their usage is effective or confusing. It can even surface shortcomings in the Educates platform itself, pointing out places where the clickable action mechanisms could work better, which is feedback that's genuinely useful for improving the platform and not just the workshop.

Model selection matters

One thing I noticed is that the quality of the review can vary significantly depending on which model you use. I tried both Sonnet and Opus, and somewhat counterintuitively, Sonnet produced better review output for this particular use case. Bigger doesn't always mean better when the task is focused and specific.

The results can also vary between runs of the same model. Two reviews of the same workshop with the same model and similar prompting can surface different observations or emphasise different aspects. That's not necessarily a problem, but it does mean you should be prepared to iterate. Running the review more than once and comparing what comes back gives you a more complete picture than relying on a single pass.

What could be better

This is a valuable tool, but there are several things that could make it better.

The most impactful would be skills support in the browser extension. Because the extension doesn't support Claude skills, I had to manually explain how Educates workshops work in the prompt. If it supported skills, I could create a dedicated workshop review skill that encapsulates all the context Claude needs: how the workshop dashboard is laid out, what the different types of clickable actions are, how navigation works, and what to look for when reviewing. That would make the prompting much simpler and the review more consistent and thorough.

The review output is also trapped in the browser extension's chat pane. It would be far more useful if Claude could save the analysis to a file, with screenshots linked to specific pieces of feedback so you can see exactly what the AI is referring to when it flags an issue. The extension does supposedly support screen recording to produce an animated GIF of the session, but I couldn't get that to work (possibly a macOS permissions issue). Even if it did work, a recording of the whole session is less useful than targeted screenshots tied to individual observations.

The new Claude remote control feature could also be interesting for this. Claude can work through a workshop faster than a person would, but because the Chrome extension pauses at each step waiting for the browser to fully update, it can still take a while when working through a large workshop, or even a course made up of multiple workshops. During that time you're just watching it work. If you could access the chat remotely, even just with snapshots of what the browser is showing rather than a live view, you could wander away from your desk while you wait and still check on it if you need to.

February 28, 2026 07:39 AM UTC

Seth Michael Larson

“The Legend of Zelda: Link’s Awakening” respects your time

I played “The Legend of Zelda: Link’s Awakening” for the first time in January and early February. The game took me 13 hours to complete the main story and a few optional side quests. I started playing the game on Nintendo Classics for the Game Boy Color, but then remembered there was a Nintendo Switch remake. I bought the game for $30 on eBay and three days later was playing again.

Days played between January 1st and February 8th, 2026. Blue is Nintendo Classics, Red is Nintendo Switch edition.

I don't play a lot of Legend of Zelda games. Before Link’s Awakening, I'd only completed Wind Waker, Four Swords Adventures, and Phantom Hourglass. My hesitation with many Zelda games is that they are large and expansive, which would require perhaps too much diligence to complete given my schedule. According to “How Long to Beat”, the main story for Breath of the Wild is ~50 hours. Given my current “pace of play” I would be playing just one game for the entire year.

Link’s Awakening is set in the compact pocket world of Koholint Island. The entire world map takes only a few minutes to walk from edge to edge, even without the teleportation tool you receive part-way through the story. There is no space left unused; every square of the island feels deliberate. The entire world map fits into 16x16 screens of 10x8 tiles per screen for a total of 2560x2048 pixels. This is fewer total pixels than my laptop screen (3456x2160).
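The pixel arithmetic can be checked with a quick sketch. The 16-pixel tile size is an assumption here; it is not stated in the post but is implied by the quoted 2560x2048 total:

```python
# Assumption: square tiles of 16x16 pixels, implied by the quoted totals.
TILE_SIZE_PX = 16

screens_wide, screens_tall = 16, 16  # world map measured in screens
tiles_wide, tiles_tall = 10, 8       # tiles per screen

map_width = screens_wide * tiles_wide * TILE_SIZE_PX
map_height = screens_tall * tiles_tall * TILE_SIZE_PX

map_pixels = map_width * map_height
laptop_pixels = 3456 * 2160

print(map_width, map_height)       # 2560 2048
print(map_pixels, laptop_pixels)   # 5242880 7464960
print(map_pixels < laptop_pixels)  # True
```

Under that assumption the whole island comes to about 5.2 million pixels against roughly 7.5 million for the laptop screen.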

I played 3 sessions on Nintendo Classics and 17 sessions on the Nintendo Switch remake, averaging around 45 minutes per play session. I really appreciated how this game fit into my life, being able to make concrete progress even in shorter play sessions of 15-30 minutes. There's a telephone booth in-game where you can talk to a shy old man and get a reminder about what you're meant to be doing next. This mechanic was super useful when picking the game back up after not playing for a few days.

Inspired by and racing YouTubers

I was originally inspired to play Link’s Awakening after the glowing review from “videogamedunkey” in 2023. Just a few weeks after I started playing myself, the “Let’s Play”-er I've been subscribed to for the longest on YouTube, raocow, began playing Link’s Awakening, too. So now my play was put on the clock. I wanted to continue watching raocow's daily videos, so I had to keep up in my own game.

Watching raocow play on the Game Boy Color version made me feel even better about my choice to play the Nintendo Switch remake. Otherwise, you spend so much time in menus swapping your tools! I also learned how to enter the Color Dungeon, an optional dungeon added in the “DX” re-release for the Game Boy Color, by watching raocow's play-through. Without this I probably wouldn't have known the dungeon was available so soon in the main story.

There were little things here and there that I accomplished differently, too. Like defeating the enemies within the Dream Shrine using a sword spin attack instead of the Pegasus Boots. Apparently these enemies are called Arm-Mimics, they look like gourds to me.

What did I miss?

Despite the heavy optional hand-holding in the game through in-game hints, I still needed to look-up solutions a few times in my play-through. Here are a few things I missed:

- I didn't know multiple things about the sword! You can use the sword to “tap” walls and listen for different sounds to detect hidden walls to destroy. You can also use the sword spin attack right away.

- The seventh dungeon was a big puzzle, and my solution to the dungeon felt on accident almost?

- The eighth dungeon had many staircases and “side-scrolling” sections which aren't represented helpfully on the dungeon map. I needed to painstakingly check every staircase multiple times to find the place I intended to be when split across play sessions.

- The final dungeon starts by requiring you to learn secret directions from a book tucked away at the beginning of the game. I had no idea about this and had to search for the solution.

Other than this, the entire game went very smoothly and I didn't feel lost for the vast majority. Nice work, game designers!

What Legend of Zelda games are similar?

What Legend of Zelda games are most similar to Link’s Awakening in terms of popularity and time to beat? I used the ranking from the publication Zelda Dungeon, which I learned from following the personal blog of one of their writers: Evan Hahn. Approximate time to beat was taken from How Long to Beat. Games that take 15 hours or fewer to complete are highlighted in green.

| Rank | Game | Hours | Beat? |
|---|---|---|---|
| 1 | Breath of the Wild | 50 | |
| 2 | Ocarina of Time | 30 | |
| 3 | Tears of the Kingdom | 60 | |
| 4 | Twilight Princess | 40 | |
| 5 | The Wind Waker | 30 | X |
| 6 | A Link Between Worlds | 15 | |
| 7 | Majora's Mask | 20 | |
| 8 | Skyward Sword | 30 | |
| 9 | Link's Awakening | 15 | X |
| 10 | Echoes of Wisdom | 20 | |
| 11 | A Link to the Past | 15 | |
| 12 | Minish Cap | 15 | |
| 13 | Oracle of Seasons | 15 | |
| 15 | Oracle of Ages | 15 | |
| 16 | Spirit Tracks | 20 | |
| 17 | Four Swords Adventures | 15 | X |
| 18 | Phantom Hourglass | 20 | X |
| 20 | Four Swords | 2 | |
| 21 | Tri Force Heroes | 15 | |

Looking at this table, it looks like the most acclaimed Zelda games that are “shorter” and that I haven't played are in order: A Link Between Worlds (3DS), A Link to the Past (SNES), Minish Cap (GBA), and Oracle of Seasons and Ages (GBC).

A Link Between Worlds being on the 3DS is unfortunate, because it's one of the few Nintendo consoles that I don't own and is apparently becoming more and more popular due to the modding community. Maybe I'll wait for 3DS to come to Nintendo Classics? :(

A Link to the Past, Minish Cap, and Oracle of Ages & Seasons are all available on Nintendo Classics. I own a genuine Oracle of Seasons cartridge, so I can dump the ROM and play on my phone using Delta. I think I'll be playing Link to the Past on Nintendo Classics and then playing Oracle of Seasons once the GameSir “Pocket Taco” pre-orders ship in March.

What is your favorite Legend of Zelda game and why? Let me know via email or on Mastodon.

Thanks for keeping RSS alive! ♥

February 28, 2026 12:00 AM UTC

February 27, 2026

EuroPython

Humans of EuroPython: Daksh P. Jain

Behind every attendee registered, there's a community. Behind every talk, a team. EuroPython isn't just a conference, it's a labor of grace, 100% volunteer-powered.

To the wranglers who herded sessions, the code guardians updating the conference website, the social magicians leading events, the quiet heroes debugging ticket sales systems—thank you. You’re not just volunteers; you’re the open-source spirit in human form.

In today's interview we'd like to highlight contributions of Daksh P. Jain, member of the Communications & Design Team at EuroPython 2025.

Thank you so much, Daksh!

Daksh P. Jain, member of the Communications & Design Team at EuroPython 2025

EP: Did you learn any new skills while volunteering at EuroPython? If so, which ones?

I was doing lots of designs for EuroPython - website, stickers, badge, etc., so I definitely expanded my design horizon more. Designing for thousands of attendees forced me to think more about clarity, accessibility, and consistency than I normally do (and now after the conference, I learned more about where and how I can do even better!)

On a personal level, I learned how to work with multiple teams together, and also became more confident in taking initiative, communicating my ideas clearly, and trusting my judgment.

EP: What's one thing about the programming community that made you want to give back by volunteering?

I’ve been into communities for a very very long time and I can certainly say that communities have shaped me into who I am today. I wouldn’t have learnt Python if it weren’t for a mentor I found in the community who believed in me. I wouldn’t have been organizing PyDelhi if it weren’t for the past organizers of PyDelhi who wanted me to take a step ahead. I also wouldn’t have been at EuroPython or doing a consulting/freelancing role or finding work or just being who I am without communities.

There are a lot of people who found the potential in me and wanted to see me grow, and I did (and still am!) And I want to do the same for someone else, potentially more than just a few people. And volunteering in larger communities and conferences boosts that contribution. You may not see it, but somewhere your work might be helping or shaping someone very slightly, and over the years, everything adds up to become bigger (and gives a ripple effect).

EP: Did you have any unexpected or funny experiences during EuroPython?

There are 2 moments that are the most memorable for me:

1. We were trying to print and laminate the EuroPython 2026 ticket, to give to the person who wins the quiz. Unfortunately the paper got jammed in the printer and we were in a bit of a hurry. Initially it was just me and one person trying to fix the printer, soon enough more people joined. Eventually it took 6 software engineers and 1 YouTube video to fix the printer jam, which was as simple as removing a lid from the bottom and taking the paper out, but none of us could figure that out 😂

2. Having real conversations. I usually expect conferences to be formal where people only talk about work, and maybe do a bit of fun on the side, but not proper real conversations. Turns out I was wrong, I was able to have really beautiful (and full of depth) conversations with a few people, and those conversations till date come to mind and help me in certain situations! I’m certainly grateful for that, and this was very unexpected!

EP: What surprised you most about the volunteer experience?

I was surprised by the amount of trust and ownership given to volunteers. Even as someone new, I felt encouraged to take initiative, make decisions, and contribute beyond just “assigned tasks.” It didn’t feel like volunteering on the sidelines, it felt like being part of the core team. Also since the scale of EuroPython is massive, what surprised me was how calmly things were handled behind the scenes. Even when something went wrong, people collaborated instead of panicking. And also how well everything was planned on Google Sheets, everything from time slots to who will do what, and it was very smooth.

EP: If you could add one thing to make the volunteer experience even better, what would it be?

More ice breakers and fun activities in-person with all volunteers! I met a lot of new people and I felt that sometimes they were a little hesitant because I was unfamiliar to them, and the reverse happened with me as well, sometimes I felt a little hesitant as well. It would be great if all the volunteers and organizers could get some extra time out to just socialize and know each other better. This would make the volunteering experience a little better, and more comfortable! I’m happy to pitch in some ideas that I have seen work in the community.

EP: What would you say to someone considering volunteering at EuroPython but feeling hesitant?

My best advice would be to fight your inner thoughts a bit, and just honestly go for it! There are 2 major reasons I’d suggest anyone to try it at least once:

1. The learning and experience you get from being part of organizing something so large is really amazing. Not only do you see how things are worked out in the back end, how many people and the way people collaborate together and how the chaos is handled, it’s close to chaos engineering but in real life :P And that is a lot of fun! You might take away a lot from the chaos engineering IRL and understanding and doing people-oriented/community work in general.

2. You make really amazing friends. Personally, I made some really amazing memories with fellow volunteers while I was at EuroPython. We played games together, jammed together at the social event, attended talks and went to explore the city. If you are lucky enough, you might also find friends who you are able to share deeper thoughts and problems with, and they do it too!

Oh and did I mention everyone is very welcoming and friendly? When I joined for the first time, especially as a remote volunteer sitting in another continent who had absolutely no idea of how the conference actually works, I felt very welcome and the team helped me in understanding everything about EuroPython. There’s a lot of room for new people to pitch in ideas and own them, and get support from everyone :)

EP: What stayed with you after the conference ended?

For me, the relationships, conversations, and sense of belonging lasted far beyond the event itself, and that’s something I didn’t expect going in. I also took away a deeper appreciation for “real tech” work, people building and improving core tools and systems, not just solving product- or client-specific problems (what I do, although I know it is equally important). Coming from the Indian community ecosystem, where conferences often lean more towards services because of our largely service-based economy, it was refreshing to hear first-hand stories about things like making a better Django ORM alternative, the trade-offs involved, and the real problems faced while building foundational technology. That perspective has stayed with me and influenced how I think about my own work.

EP: Thank you for your contribution, Daksh!

February 27, 2026 07:56 PM UTC

Real Python

The Real Python Podcast – Episode #286: Overcoming Testing Obstacles With Python's Mock Object Library

Do you have complex logic and unpredictable dependencies that make it hard to write reliable tests? How can you use Python's mock object library to improve your tests? Christopher Trudeau is back on the show this week with another batch of PyCoder's Weekly articles and projects.

February 27, 2026 12:00 PM UTC

Quiz: Dependency Management With Python Poetry

Test your understanding of Dependency Management With Python Poetry.

You’ll revisit how to install Poetry the right way, create new projects, manage virtual environments, declare and group dependencies, work with lock files, and keep your dependencies up to date.

February 27, 2026 12:00 PM UTC

Ned Batchelder

Pytest parameter functions

Pytest’s parametrize is a great feature for writing tests without repeating yourself needlessly. (If you haven’t seen it before, read Starting with pytest’s parametrize first). When the data gets complex, it can help to use functions to build the data parameters.

I’ve been working on a project involving multi-line data, and the parameterized test data was getting awkward to create and maintain. I created helper functions to make it nicer. The actual project is a bit gnarly, so I’ll use a simpler example to demonstrate.

Here’s a function that takes a multi-line string and returns two numbers, the lengths of the shortest and longest non-blank lines:

def non_blanks(text: str) -> tuple[int, int]:
    """Stats of non-blank lines: shortest and longest lengths."""
    lengths = [len(ln) for ln in text.splitlines() if ln]
    return min(lengths), max(lengths)

We can test it with a simple parameterized test with two test cases:

import pytest

from non_blanks import non_blanks

@pytest.mark.parametrize(
    "text, short, long",
    [
        ("abcde\na\nabc\n", 1, 5),
        ("""\
A long line
The next line is blank:

Short.
Much much longer line, more than anyone thought.
""", 6, 48),
    ]
)
def test_non_blanks(text, short, long):
    assert non_blanks(text) == (short, long)

I really dislike how the multi-line string breaks the indentation flow, so I wrap strings like that in textwrap.dedent:

@pytest.mark.parametrize(
    "text, short, long",
    [
        ("abcde\na\nabc\n", 1, 5),
        (textwrap.dedent("""\
            A long line
            The next line is blank:

            Short.
            Much much longer line, more than anyone thought.
            """),
        6, 48),
    ]
)

(For brevity, this and the following examples show only the parametrize decorator; the test function itself stays the same.)

This looks nicer, but I have to remember to use dedent, which adds a little bit of visual clutter. I also need to remember that first backslash so that the string won’t start with a newline.
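The effect of that first backslash is easy to check in isolation. The following is a minimal sketch using only the standard library; the two-line sample text is made up for illustration and is not from the project described here.

```python
import textwrap

# Without the backslash, the literal begins with a newline, and dedent keeps it.
with_newline = textwrap.dedent("""
    Short.
    Longer line.
    """)

# With the backslash, the data starts on the first line of the string.
without_newline = textwrap.dedent("""\
    Short.
    Longer line.
    """)

print(repr(with_newline))
print(repr(without_newline))
```

In both cases dedent removes the common leading whitespace, and the whitespace-only last line collapses to nothing, so the only difference between the two results is the leading newline.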

As the test data gets more elaborate, I might not want to have it all inline in the decorator. I’d like to have some of the large data in its own file:

@pytest.mark.parametrize(
    "text, short, long",
    [
        ("abcde\na\nabc\n", 1, 5),
        (textwrap.dedent("""\
            A long line
            The next line is blank:

            Short.
            Much much longer line, more than anyone thought.
            """),
        6, 48),
        (Path("gettysburg.txt").read_text(), 18, 80),
    ]
)

Now things are getting complicated. Here’s where a function can help us. Each test case needs a string and two numbers. The string is sometimes provided explicitly, sometimes read from a file.

We can use a function to create the correct data for each case from its most convenient form. We’ll take a string and use it as either a file name or literal data. We’ll deal with the initial newline, and dedent the multi-line strings:

def nb_case(text, short, long):
    """Create data for test_non_blanks."""
    if "\n" in text:
        # Multi-line string: it's actual data.
        if text[0] == "\n":     # Remove a first newline
            text = text[1:]
        text = textwrap.dedent(text)
    else:
        # One-line string: it's a file name.
        text = Path(text).read_text()
    return (text, short, long)

Now the test data is more direct:

@pytest.mark.parametrize(
    "text, short, long",
    [
        nb_case("abcde\na\nabc\n", 1, 5),
        nb_case("""
            A long line
            The next line is blank:

            Short.
            Much much longer line, more than anyone thought.
            """,
        6, 48),
        nb_case("gettysburg.txt", 18, 80),
    ]
)
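To see what the helper hands to pytest for each kind of input, here is a small standalone sketch. It repeats the nb_case logic from above so it runs on its own. The file name sample.txt and its contents are stand-ins invented for this illustration, written to a temporary directory instead of relying on gettysburg.txt.

```python
import tempfile
import textwrap
from pathlib import Path


def nb_case(text, short, long):
    """Same logic as the helper above, repeated so this sketch runs alone."""
    if "\n" in text:
        # Multi-line string: literal data.
        if text[0] == "\n":
            text = text[1:]
        text = textwrap.dedent(text)
    else:
        # One-line string: treated as a file name.
        text = Path(text).read_text()
    return (text, short, long)


# Literal data: the leading newline is dropped and the indentation removed.
literal = nb_case("""
    A long line

    Short.
    """, 6, 11)

# File data: a throwaway file (hypothetical content) stands in for a real one.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "sample.txt"
    sample.write_text("one\nthree\n")
    from_file = nb_case(str(sample), 3, 5)

print(literal)
print(from_file)
```

One consequence of dispatching on the newline: a literal with no newline at all would be read as a file name, so literal data needs at least one newline in it.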

One nice thing about parameterized tests is that pytest creates a distinct ID for each one. This helps with reporting failures and with selecting tests to run. But the ID is made from the test data. Here, our last test case has an ID using the entire Gettysburg Address, over 1500 characters. It was very short for a speech, but it’s very long for an ID!

This is what the pytest output looks like with our current IDs:

test_non_blank.py::test_non_blanks[abcde\na\nabc\n-1-5] PASSED

test_non_blank.py::test_non_blanks[A long line\nThe next line is blank:\n\nShort.\nMuch much longer line, more than anyone thought.\n-6-48] PASSED

test_non_blank.py::test_non_blanks[Four score and seven years ago our fathers brought forth on this continent, a\nnew nation, conceived in Liberty, and dedicated to the proposition that all men\nare created equal.\n\nNow we are engaged in a great civil war, testing whether that nation, or any\nnation so conceived and so dedicated, can long endure. We are met on a great\nbattle-field of that war. We have come to dedicate a portion of that field, as a\nfinal resting place for those who here gave their lives that that nation might\nlive. It is altogether fitting and proper that we should do this.\n\nBut, in a larger sense, we can not dedicate \u2013 we can not consecrate we can not\nhallow \u2013 this ground. The brave men, living and dead, who struggled here, have\nconsecrated it far above our poor power to add or detract. The world will little\nnote, nor long remember what we say here, but it can never forget what they did\nhere. It is for us the living, rather, to be dedicated here to the unfinished\nwork which they who fought here have thus far so nobly advanced. It is rather\nfor us to be here dedicated to the great task remaining before us that from\nthese honored dead we take increased devotion to that cause for which they gave\nthe last full measure of devotion \u2013 that we here highly resolve that these dead\nshall not have died in vain that this nation, under God, shall have a new birth\nof freedom \u2013 and that government of the people, by the people, for the people,\nshall not perish from the earth.\n-18-80] PASSED

Even that first, shortest test has an awkward, hard-to-use test name.

For more control over the test data, instead of creating tuples to use as test cases, you can use pytest.param to create the internal parameters object that pytest needs. Each of these can have an explicit ID assigned. Pytest will still assign an ID if you don’t provide one.
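As a minimal sketch of pytest.param on its own, separate from the helper function: the test name test_lengths, the id "three-lines", and the second case are all invented for this illustration.

```python
import pytest

# Two hand-written cases; the id is an arbitrary label chosen for this sketch.
CASES = [
    pytest.param("abcde\na\nabc\n", 1, 5, id="three-lines"),
    pytest.param("x\n", 1, 1),  # no id given: pytest generates one
]


@pytest.mark.parametrize("text, short, long", CASES)
def test_lengths(text, short, long):
    # Inline version of the shortest/longest calculation, to stay self-contained.
    lengths = [len(ln) for ln in text.splitlines() if ln]
    assert (min(lengths), max(lengths)) == (short, long)
```

With the explicit id, the first case should be reported as test_lengths[three-lines], while the second falls back to an ID that pytest builds from its values.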

Here’s an updated nb_case() function using pytest.param:

def nb_case(text, short, long, id=None):
    if "\n" in text:
        # Multi-line string: it's actual data.
        if text[0] == "\n":     # Remove a first newline
            text = text[1:]
        text = textwrap.dedent(text)
    else:
        # One-line string: it's a file name.
        id = id or text
        text = Path(text).read_text()
    return pytest.param(text, short, long, id=id)